Are Watermarked Large Language Models More Prone to Hallucinations?

In this blog post, I investigate whether watermarked LLMs are more likely to “hallucinate,” or make up facts, because of limitations imposed by the watermarking scheme.

Introduction

As LLMs grow more capable, it is becoming increasingly difficult to tell human-written content apart from AI-generated content. Current post-hoc AI detection tools like GPTZero are easy to bypass and can be biased against non-native English speakers, so they are neither robust nor fair. Watermarking schemes offer a more secure and less biased way to detect LLM-generated content, but they may degrade output quality. In this blog post, I investigate whether watermarked LLMs are more likely to “hallucinate,” or make up facts, because of limitations imposed by the watermarking scheme. I formulate a research question, explain my assumptions and experimental setup, analyze my results, and outline next steps. Overall, although my results are not statistically significant, they suggest that hallucinations in watermarked LLMs are worth studying, and I present interpretable qualitative results that I explain with fundamental ML concepts.

Background

The need for AI detection algorithms

Deepfakes. AI-assisted academic plagiarism. Bots on social media spreading fake news. These are just a few of the real-world problems brought about by recent advances in large language model capabilities, which make it easy for malicious users to spread misinformation while making it hard for social media platforms or governments to detect its AI origin. As a result, detecting AI-generated content in the wild is becoming one of the hottest research fields in AI. In fact, the White House recently commissioned an executive order

Some signs have already appeared that point to the answer being “no.” When ChatGPT was first released to the public, coding Q&A site StackOverflow temporarily banned

Some AI detection tools, such as GPTZero

So is it all doomed? Will we reach a state of the world in which people can’t trust anything they see on the internet to be human-generated?

Not quite. New watermarking algorithms make it possible to trace text back to a specifically watermarked LLM with high accuracy and a low false-positive rate. They also make it costly to evade detection: modifying such an LLM’s output enough to remove the watermark takes considerable effort if the quality of the output is to be preserved.

So what is watermarking?

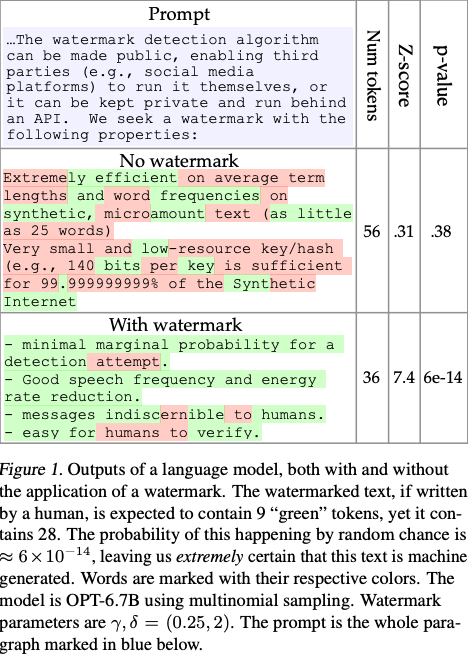

Watermarking, in the context of LLMs, is the process of modifying an LLM’s generation process so that signals are embedded into the generated text that are invisible to humans but algorithmically detectable. The key difference between watermarking and post-hoc detection algorithms like GPTZero is that post-hoc detectors rely on LLM output sounding “artificial,” and as LLM capabilities grow, this is unlikely to hold. Watermarking schemes, on the other hand, work regardless of the capabilities of the underlying LLM, which makes them more robust to advancements in AI. The watermarking scheme designed in A Watermark for Large Language Models (Kirchenbauer, Geiping et al.)

The existence of an undetectable, unbreakable, and accurate watermarking scheme would be incredible! If every LLM were watermarked before its release, any text it generated would contain statistical signals that prove its AI origin, making it difficult for adversaries to pass off LLM-generated content as human-generated. Furthermore, because watermarking schemes rely on detecting signals associated with each LLM’s watermarking process rather than on analyzing the perplexity of text, human-generated content would rarely be flagged as AI-generated. Unfortunately, the recent paper Watermarks in the Sand: Impossibility of Strong Watermarking for Generative Models (Zhang et al.)

So if an attacker is willing to spend lots of time and effort, they can break any watermarking scheme. Still, maybe this barrier is enough to deter most attackers. Then, why wouldn’t we watermark every LLM released to the public?

Quality degradation in watermarked LLMs

The truth is, because watermarking schemes force an LLM to preferentially sample from a pool of “green” tokens, the quality of the output of watermarked LLMs may decrease. To understand the intuition behind this, here’s a short clip from “Word Sneak with Steve Carell”: link

“We weren’t dressed properly for moose-watching or zucchini-finding… I for one had the wrong kind of pantaloons on.”

Steve and Jimmy were given cards with random words and had to work them into a casual conversation. Similarly, one can imagine an LLM generating odd-sounding sentences in order to adhere to a watermarking scheme.

Quality degradation is amplified when the space of high-quality outputs is small. For example, the prompts “What is 12 times 6?” or “What is the first section of the U.S. Constitution?” have only one accepted answer, forcing a watermarked LLM to either give up on watermarking the output or hallucinate incorrect answers.

The latter outcome is the one I investigate further in this blog post: Are watermarked LLMs more prone to hallucinations? In particular, I investigate whether there are tradeoffs between output quality and watermark security. Lastly, I perform a qualitative analysis of watermarked outputs and explain any interpretable trends caused by the watermarking scheme.

Experiment

Setup

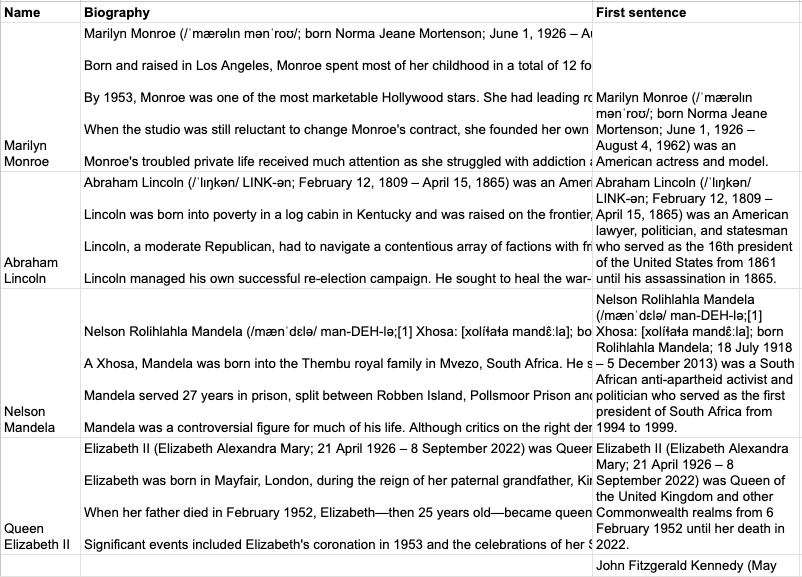

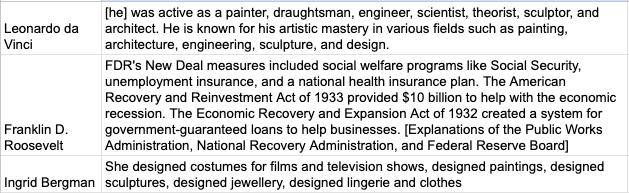

I investigate my hypothesis by experimenting with unwatermarked and watermarked LLMs. I outline my experiment here: first, I ask an unwatermarked LLM to generate biographies for 100 famous people. I then ask an evaluation oracle, GPT 3.5, to count the number of mistakes in each generated biography. This serves as my control group. Next, I create three experimental groups, each of which corresponds to a watermarked LLM with a different degree of watermarking security. I ask GPT 3.5 to count the number of mistakes made by each of the watermarked LLMs, and perform statistical Z-tests to determine whether watermarked LLMs are more likely to hallucinate.
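The outline above can be sketched as a small loop. This is a minimal skeleton, not my actual notebook code: `run_experiment`, `fake_generate`, and `fake_oracle` are hypothetical names, and the two toy functions only stand in for the real LLM and the GPT 3.5 oracle so that the sketch runs end to end.

```python
from statistics import mean
from typing import Callable, Dict, List


def run_experiment(
    names: List[str],
    generators: Dict[str, Callable[[str], str]],
    count_mistakes: Callable[[str], int],
) -> Dict[str, float]:
    """Return the mean number of flagged mistakes per group."""
    results = {}
    for group, generate in generators.items():
        # One biography per person, scored by the evaluation oracle.
        counts = [count_mistakes(generate(name)) for name in names]
        results[group] = mean(counts)
    return results


# Toy stand-ins (hypothetical): a real run would call the LLM and the oracle.
def fake_generate(name: str) -> str:
    return f"{name} was born in 1900."


def fake_oracle(biography: str) -> int:
    return biography.count("1900")


summary = run_experiment(["Ada", "Bob"], {"control": fake_generate}, fake_oracle)
print(summary)
```

Adding the three watermarked groups would just mean adding three more entries to the `generators` dictionary.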

I now walk through the steps of my experiment in more depth, with commentary on any decisions or tradeoffs I made in the process. Hopefully anyone reading this can follow what I did to replicate, or even build upon, my results!

My coding environment was Google Colab Pro, and its V100 GPU was sufficient to run all my code; a complete run-through of my final Jupyter notebook takes a bit over an hour. The watermarking scheme I sought to replicate can be applied to any LLM whose last-layer logits are accessible to the watermark, so I looked into a variety of open-source LLMs. Ultimately, I decided on OPT (1.3 billion parameters)

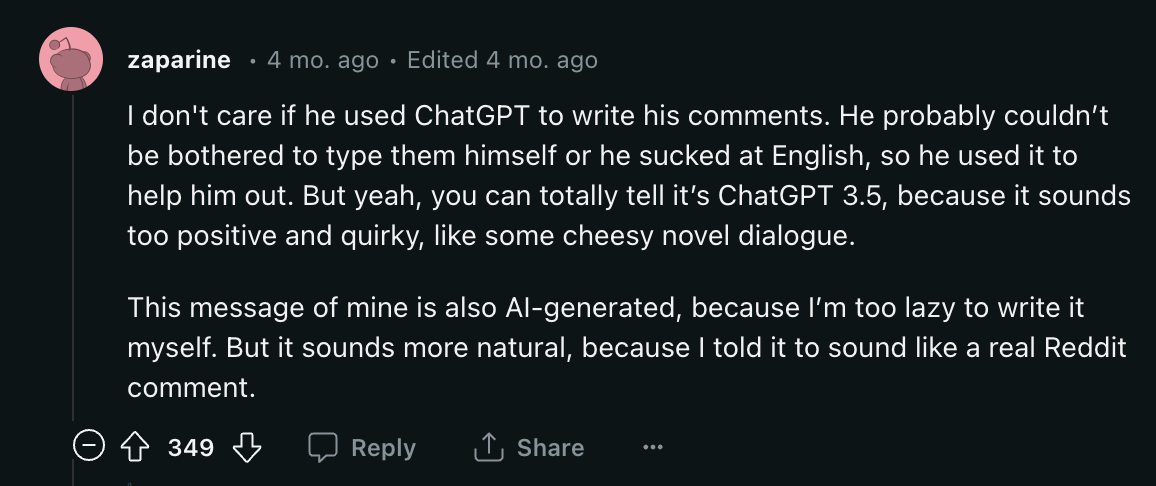

For my experiment, I needed a dataset of biographies of famous people. Unfortunately, I couldn’t find one publicly available after a few hours of searching, so I did the next best thing: I made my own. Using a list of 100 famous people’s biographies I found on a website

Lastly, I needed an evaluation oracle to count up the number of factual mistakes in each generated biography. I decided to make a tradeoff between accuracy and efficiency by letting ChatGPT do the work for me instead of manually cross-checking sample biographies with their Wikipedia biographies. After a bit of research into OpenAI’s APIs and pricing plans, I settled on the GPT 3.5-turbo API, since I expected to generate 600k tokens for my experiment, which would be a bit less than $1 in costs. With more funding, I would have probably used GPT 4, but I checked and was satisfied with the outputs of GPT 3.5-turbo.

Watermarking scheme implementation

With the experimental variables of open-source model, dataset, and evaluation oracle decided upon, I began to implement the watermarking scheme detailed in A Watermark for Large Language Models. The watermarking scheme is made up entirely of two components: a watermarking logits processor that influences how tokens are sampled at generation time, and a watermark detector that detects if a given piece of text contains a watermark. There were also several tunable parameters detailed in the watermarking paper, but the two of interest are gamma and delta.

Gamma represents the breadth of the watermark in terms of vocabulary, that is, the fraction of the vocabulary placed in the “green” pool at each step. A higher gamma includes more tokens in the green pool, which makes responses sound more natural but may dilute the watermark’s detectability. A lower gamma restricts the pool to fewer tokens, which increases detectability but may hurt output quality. The authors of the watermarking paper suggested a value for gamma between 0.25 and 0.75.

Delta represents the intensity of the watermark, or how strongly the watermark prefers “green” tokens to “red” tokens at each step of the generation process. The higher the delta, the more evident the resulting watermark. The watermarking paper suggested a value for delta between 0.5 and 2.0.
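To make gamma and delta concrete, here is a minimal sketch of the green-pool idea in plain Python. It is an illustration under assumptions, not the paper’s implementation: the SHA-256 seeding, the `key` value, and the function names `green_ids` and `watermark_logits` are all hypothetical, and the toy vocabulary has only 8 tokens.

```python
import hashlib
import math
import random


def green_ids(prev_token: int, vocab_size: int, gamma: float, key: int = 42) -> set:
    """Pick a gamma fraction of the vocabulary, seeded by the previous token."""
    digest = hashlib.sha256(f"{key}:{prev_token}".encode()).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    ids = list(range(vocab_size))
    rng.shuffle(ids)
    return set(ids[: int(gamma * vocab_size)])


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def watermark_logits(logits, prev_token, gamma, delta):
    """Add delta to the logit of every green token; red tokens are unchanged."""
    green = green_ids(prev_token, len(logits), gamma)
    return [x + delta if i in green else x for i, x in enumerate(logits)]


logits = [0.0] * 8  # toy case: the model has no preference among 8 tokens
green = green_ids(prev_token=7, vocab_size=8, gamma=0.5)
probs = softmax(watermark_logits(logits, prev_token=7, gamma=0.5, delta=2.0))
green_mass = sum(probs[i] for i in green)
print(f"probability mass on green tokens: {green_mass:.3f}")
```

In this toy case half of the tokens are green, so without a watermark the green pool would receive half of the probability mass; with delta = 2.0 it receives e^2 / (e^2 + 1), or about 88%. When the model strongly prefers one token, the same delta shifts the distribution much less.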

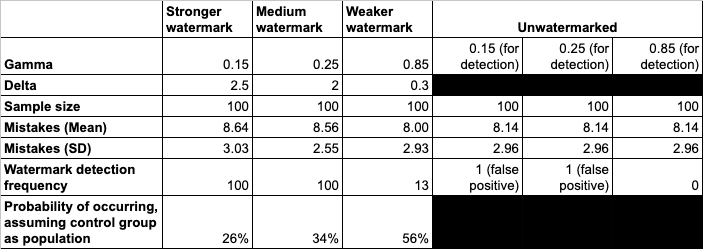

Tuning these parameters, I created three different experimental groups, each corresponding to a different level of watermarking strength: strong, medium, and weak. The exact values of gamma and delta I used can be tinkered with; my choices were based on what empirically had the best effects.

I left the detector algorithm provided by the watermarking paper mostly untouched, except for the Z-threshold. I lowered it to z=2.3 so that the detector would flag text as watermarked more readily, which makes comparisons between watermarking strengths easier, but this threshold still corresponds to roughly 99% confidence. Additionally, the detector algorithm takes gamma as input, and it must be the same gamma used to generate the watermarked text I am attempting to detect. This is a key parameter that differentiates a watermark detector from a general post-hoc AI detector. Knowing gamma and how the green pool is chosen at each step, the detector can work backwards through a piece of text and test whether it contains more green tokens than the gamma fraction expected by chance. Because detection depends on this token-level signal and not on how the text sounds, human-written text that sounds bland or like a non-native English speaker is unlikely to be misclassified as AI-generated, resulting in a low false-positive rate.
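The detection step boils down to a one-proportion z-test on the number of green tokens. Below is a minimal sketch of that statistic as I understand it from the watermarking paper. The function names are hypothetical, and the token counts in the example are made up for illustration.

```python
import math


def watermark_z_score(green_count: int, total_tokens: int, gamma: float) -> float:
    """z-statistic for seeing green_count green tokens out of total_tokens.

    Under the null hypothesis (no watermark), each token is green with
    probability gamma, so the count has mean gamma*T and variance T*gamma*(1-gamma).
    """
    expected = gamma * total_tokens
    std = math.sqrt(total_tokens * gamma * (1 - gamma))
    return (green_count - expected) / std


def is_watermarked(green_count, total_tokens, gamma, z_threshold=2.3):
    return watermark_z_score(green_count, total_tokens, gamma) > z_threshold


# Illustrative counts only: 65 green tokens out of 100 with gamma = 0.5.
z = watermark_z_score(green_count=65, total_tokens=100, gamma=0.5)
print(z, is_watermarked(65, 100, 0.5))
```

With these made-up counts, z = (65 - 50) / 5 = 3.0, which clears the z = 2.3 threshold but would not clear a threshold of 4.0.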

Prompting my models

First, I needed to prompt my open-source model to generate biographies of famous people. Since the version of OPT I used is a Causal LM, not an Instruct LM, I needed to prompt it with a sentence that would make it most likely to continue where I left off and generate a biography of the specified person. After some testing, I settled on the following prompt:

However, I noticed that the watermarked LLMs were initially outputting repeated phrases, e.g. “Barack Obama was the first African-American president of the United States. Barack Obama was the first African-American president of the United States.” Although this wasn’t technically hallucination, I wanted the output to look like a real biography, so I tuned two hyperparameters used during text generation: no_repeat_ngram_size=3 and repetition_penalty=1.1 to discourage repetitive phrases.
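For intuition, here is a simplified sketch of what these two settings do conceptually. It is not the Hugging Face library code: `banned_next_tokens` and `apply_repetition_penalty` are hypothetical helpers, tokens are plain integers, and the penalty rule (divide positive logits, multiply negative ones) reflects my understanding of how the option behaves.

```python
def banned_next_tokens(history, n):
    """Tokens that would complete an n-gram already present in history."""
    if len(history) < n:
        return set()
    prefix = tuple(history[-(n - 1):]) if n > 1 else ()
    banned = set()
    for i in range(len(history) - n + 1):
        # If an earlier (n-1)-gram matches the current suffix, the token
        # that followed it would repeat a full n-gram, so ban it.
        if tuple(history[i : i + n - 1]) == prefix:
            banned.add(history[i + n - 1])
    return banned


def apply_repetition_penalty(logits, history, penalty):
    """Make already-generated tokens less likely by shrinking their logits."""
    out = list(logits)
    for tok in set(history):
        out[tok] = out[tok] / penalty if out[tok] > 0 else out[tok] * penalty
    return out


history = [1, 2, 3, 1, 2]          # the bigram (1, 2) was followed by 3 before
banned = banned_next_tokens(history, n=3)
penalized = apply_repetition_penalty([2.0, -1.0, 0.5], history=[0, 1], penalty=1.1)
print(banned, penalized)
```

Here the only banned token is 3, since generating it would repeat the trigram (1, 2, 3). The n-gram rule is a hard constraint, while the penalty is a soft nudge, which is why a mild value like 1.1 can be combined with it.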

Next, I needed to prompt my evaluation oracle, GPT 3.5, to evaluate sample biographies. Since GPT 3.5 is an Instruct model, I can directly ask it to evaluate a given biography. I decided on the following prompt:

I ask GPT 3.5 to clearly list each detected mistake along with its correction. This reduces the likelihood of it hallucinating and lets me manually verify its evaluations.

Results

Quantitative results

After generating four biographies for each person (one unwatermarked control sample and three watermarked samples with different watermarking parameters), I evaluate them with the GPT 3.5 evaluation oracle.

On average, the unwatermarked control LLM generates biographies that contain 8.14 mistakes each. The strongest watermarking setting has a mean of 8.64 mistakes, the medium watermark has 8.56 mistakes on average, and the weakest watermark has 8.00 mistakes on average. Clearly the weakest watermarked LLM doesn’t hallucinate any more than the control group LLM, but it only has a 13% detection rate, which is pretty substandard for a watermarking scheme. The medium and strongest watermarks perform slightly worse than the control group LLM. By performing Z-tests on the measured statistics (never thought AP Stats would come in handy), I find that the probabilities of observing the results we got if watermarking had no effect are 26% and 34% for the strong and medium watermarked LLMs respectively. So, although these differences aren’t statistically significant, they are weakly consistent with watermarked LLMs hallucinating more often, and the effect is most visible with the strongest watermark setting.
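For readers who want to run this kind of comparison themselves, here is a minimal sketch of a two-sample Z-test on group means. It assumes a one-sided test with unpooled standard errors, which may differ from the exact variant I ran. The numbers in the example are toy values chosen for illustration, not my results; the real inputs would be the per-group means, standard deviations, and sample sizes.

```python
import math


def one_sided_p(z: float) -> float:
    """P(Z > z) for a standard normal Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def two_sample_z(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """z-statistic for the alternative mean_b > mean_a (unpooled standard error)."""
    se = math.sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    return (mean_b - mean_a) / se


# Toy values (hypothetical): control group a vs. watermarked group b.
z = two_sample_z(mean_a=10.0, sd_a=4.0, n_a=100, mean_b=11.0, sd_b=4.0, n_b=100)
p = one_sided_p(z)
print(f"z = {z:.3f}, one-sided p = {p:.3f}")
```

A p-value below the chosen significance level (commonly 5%) would count as statistically significant; values like 26% or 34% do not.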

We also see that our unwatermarked biographies had a false positive rate (FPR) of approximately 1%. This can be attributed to the change I made to the Z-threshold, from 4.0 to 2.3. Indeed, I made the change knowing that a Z-threshold of 2.3 reflects 99% confidence, so our FPR of 1% is in line with this change. If I had left the Z-threshold at 4.0, we would have an FPR of approximately 0.003%. However, with a higher Z-threshold, the weakest watermarked LLM would have an even lower successful detection rate, so I accepted the tradeoff of having one or two false positives in order to catch more watermarks. This also lets us see more clearly that even though weaker watermarks are less detectable, some trace of the watermarking signal still remains.
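The link between the Z-threshold and the expected false positive rate is just the upper tail of the standard normal distribution. A quick check, assuming a one-sided test (the function name `false_positive_rate` is mine):

```python
import math


def false_positive_rate(z_threshold: float) -> float:
    """P(Z > z_threshold) for a standard normal Z (one-sided tail)."""
    return 0.5 * math.erfc(z_threshold / math.sqrt(2))


fpr_relaxed = false_positive_rate(2.3)  # about 0.0107, roughly 1%
fpr_default = false_positive_rate(4.0)  # about 3.2e-05, roughly 0.003%
print(f"{fpr_relaxed:.4%}  {fpr_default:.4%}")
```

With 100 unwatermarked biographies, a 1% rate corresponds to about one expected false positive, which matches the “one or two” I accepted above.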

Qualitative results

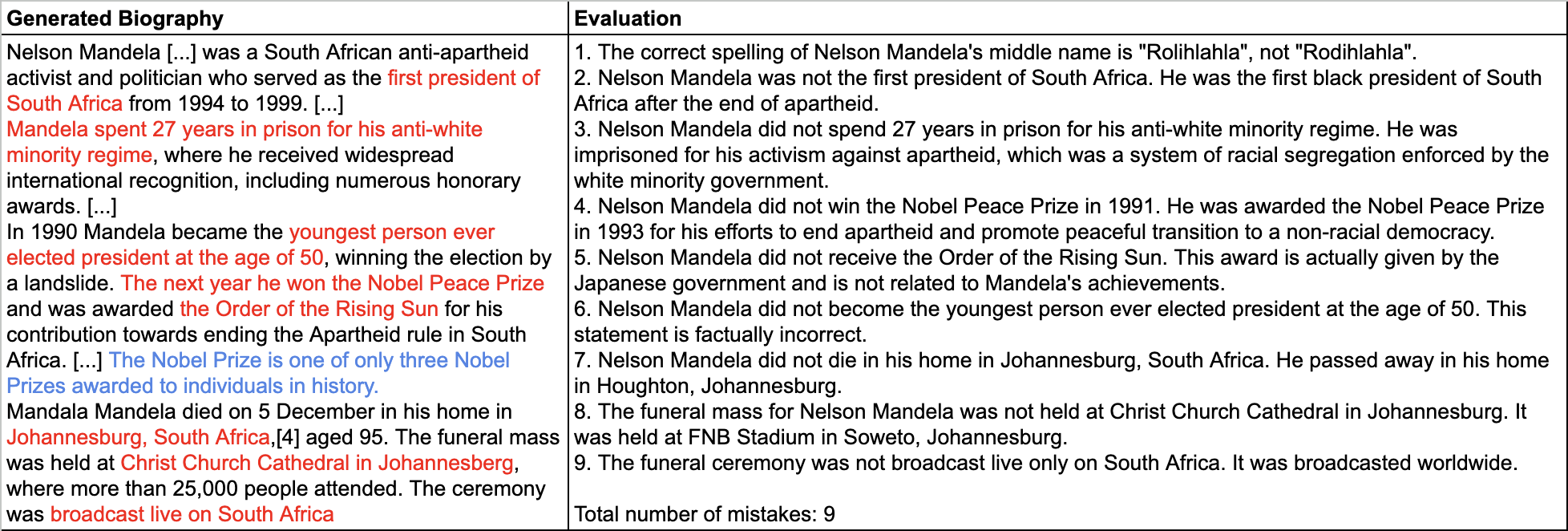

In addition to quantitative results, I perform a deeper, qualitative analysis on a biography generated for a specific person. I chose the strong watermarked biography for Nelson Mandela because of the interesting and interpretable trends we can see:

I highlighted the errors pointed out by our evaluation oracle in red text for ease of comparison. Note that there may be additional errors not caught by GPT 3.5. Generally, we see that the errors come from mixing up dates, names, ages, locations, etc., and are not completely made-up facts. In fact, the biography does capture a relatively sound summary of Mandela’s life. I posit that the hallucinations we see are mostly simple fact mismatches because the watermarking schemes we impose on OPT still give it the flexibility to tell a good story of Mandela’s life, but when it comes down to token-by-token sampling, our LLM may be forced to generate the wrong date or name in order to adhere to the “green” token preference scheme.
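Here is a toy illustration of how that could happen at a single step. Everything in it is hypothetical: the candidate tokens, their logits, and which token happens to be green were chosen by hand to show the mechanism, not taken from OPT.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_probs(logit_map, green_tokens, delta):
    """Softmax over candidates after adding delta to the green ones."""
    tokens = list(logit_map)
    biased = [logit_map[t] + (delta if t in green_tokens else 0.0) for t in tokens]
    return dict(zip(tokens, softmax(biased)))


# Hypothetical logits for the next token after "... was born in":
candidates = {"1918": 2.0, "1919": 1.5, "1920": 0.5}
green = {"1919"}  # hypothetical: only a wrong year lands in the green pool

plain = next_token_probs(candidates, set(), 0.0)
marked = next_token_probs(candidates, green, delta=2.0)
print(max(plain, key=plain.get), max(marked, key=marked.get))
```

Without the watermark the most likely token is “1918”; with delta = 2.0 the green token “1919” overtakes it. Years, names, and places are exactly the kind of tokens where several candidates have similar logits, so a small bias is enough to swap one for another while the surrounding sentence stays fluent.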

I also wanted to highlight the blue text. The sentence “The Nobel Prize is one of only three Nobel Prizes awarded to individuals in history” not only is incorrect but also doesn’t add much substance to the biography. Here are three other hand-picked sections of generated biographies that aren’t informative to the person’s biography:

In each piece of text, the reported facts may not be incorrect, but they take up valuable space that we would otherwise like to be used to introduce new facts about the person. So even if these facts aren’t flagged as factual inaccuracies by our evaluation oracle, they do demonstrate a degradation in model output, raising the issue of response quality degradations in addition to hallucinations.

Theoretical underpinnings of results

Taking a theoretical perspective, what exactly causes LLMs to hallucinate? To answer this question, I turn to one of the important topics covered in class: reward misspecification. If, during training time, we give low loss to outputs that sound similar to our training data, we’re not necessarily training the LLM to be more accurate. Instead, we’re training the LLM to generate output that is more likely to be accepted as “close-enough” to the training data. When we ask ChatGPT to write a poem or reply to an email, being “close-enough” is usually fine. But when we need it to be 100% accurate, such as solving a math problem or generating a biography for a real person, being “close-enough” doesn’t quite make the cut.

Furthermore, the auto-regressive manner in which LLMs generate text means they sample the “most-likely” token based on previously seen tokens. If our LLM starts to generate FDR’s most important New Deal measures, the “most-likely” tokens to follow might be explaining each of the New Deal measures in detail. But this isn’t what we want out of a biography of FDR!

Both of these problems (hallucinating false information and generating uninformative facts) are observed in our experiments. But unfortunately, it’s hard to reduce one issue without exacerbating the other. I attempted to decrease the temperature parameter in OPT’s text generation, but this resulted in OPT generating strings of run-on, non-informative sentences, such as “Marilyn Monroe starred in several films, including Dangerous Years, Scudda Hoo! Scudda Hay!, Ladies of the Chorus, Love Happy…” because each additional film was the most likely follow-up to the previously generated tokens. Similarly, increasing the temperature might generate text that sounds more “human-like,” but upon closer inspection, would be riddled with factual inaccuracies.
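The effect of temperature is easy to see on a toy distribution. This sketch uses three made-up logits and a hypothetical helper, `softmax_with_temperature`, which divides the logits by the temperature before the softmax.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax over logits / temperature, computed stably."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # toy logits, most likely token first
cold = softmax_with_temperature(logits, 0.5)  # low temperature
hot = softmax_with_temperature(logits, 2.0)   # high temperature
print([round(p, 3) for p in cold], [round(p, 3) for p in hot])
```

At temperature 0.5 the top token takes roughly 87% of the probability mass, so generation keeps picking the single most likely continuation (another film title, then another). At temperature 2.0 it takes only about 51%, leaving more room for less likely, and potentially wrong, tokens.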

Conclusion

Further Work

There are a couple of improvements I would have made if I had more time or more compute to work with. With more time, I would have liked to learn how to scrape data from webpages, so I could create a dataset of a thousand famous people’s biographies. Then, I could run my experiments with a larger sample size, which would give the tests more power to detect a real difference. However, even with access to a larger dataset, I would have been bottlenecked by compute. Using Colab Pro’s V100 GPU, I estimate that generating biographies and evaluating them for 1000 people would take at least 10 hours of runtime.

If I had access to more powerful GPUs, I also would have investigated a more nuanced research question: are bigger, more capable LLMs that have watermarking schemes applied to them less prone to hallucinating? I would have liked to run my experiments using a larger open-source LLM, such as LLaMa 65B, instead of OPT 1.3B, to see if watermarking schemes still negatively affect an LLM’s ability to perform tasks, when the base LLM is much more capable.

What I learned

As this project was my first self-driven research experiment, I faced many challenges, but also learned so much. Probably the most important thing I learned is that compute is important, but it’s not the be-all and end-all. There are tons of open-source models out there that can be run on a V100, and Google Colab Pro offers it at an affordable price. I also learned how important it is to define a well-scoped research problem, and how chatting with others can help you gain fresh insights on roadblocks.

I found that my work on this project was structured very differently from how I would approach a problem set. With a pset, much of the starter code is provided; in particular, the code to import datasets, process them, and visualize results is all provided. In this project, most of my time was spent making design decisions: which dataset should I use, how should I format my results, what hyperparameters should I use. Although my final notebook might not contain the most lines of code, I can explain my reasoning behind each line clearly, and I think this is a result of the thorough research I performed.

Lastly, I learned that tackling an unanswered question in research is tractable for most students with some programming experience and interest in a scientific field. I didn’t have the most extensive ML background, nor any prior undergraduate research experience, but just by reading some papers on watermarking and writing down the questions that popped into my head, I came up with some viable research questions that could be tackled by an independent research project.

I’m very thankful to my friends Franklin Wang and Miles Wang for helping me configure my coding environment and keeping me motivated throughout the project, and also to the TAs I spoke with during the ideation and scoping stage. To other students reading this blog post who want to do ML research but aren’t sure how to get started, I encourage you to try replicating some papers with code! Papers With Code

Supplemental Material

In this GitHub repository, you can access the dataset I made of famous people’s biographies, the code I used to generate my results, and the CSV files of results.