Learning a Lifted Linearization for Switched Dynamical Systems

A final project proposal for 6.s898 in fall 2023

Introduction

All models are wrong, but some are useful. —George Box

Deep neural networks are incredibly capable of generating models from data. Whether these are models that allow for the classification of images, the generation of text, or the prediction of a physical system’s dynamics, neural networks have proliferated as a favored way of extracting useful, predictive information from a set of data.

In robotics, the speed at which a model can be run and its explainability can be just as important as the accuracy of its predictions. Techniques such as model predictive control can enable remarkable performance even when they’re based on flawed predictive models.

Nevertheless, this kind of linearization has its own weaknesses. Chief among them is the inherently local nature of the approach: a Taylor series must be taken around a single point and becomes less valid further away from this location. As an alternative, lifting linearization approaches inspired by Koopman operator theory have become more commonplace.

\(f(x)|_{x=a}\approx f(a)+\frac{f'(a)}{1!}(x-a)\)
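To make the local nature of this approximation concrete, here is a minimal sketch that linearizes $\sin(x)$ about $a=0$ and measures the error at increasing distances. The helper name `taylor1` and the sample points are my own choices for illustration.

```python
import math

def taylor1(f, df, a, x):
    """First-order Taylor approximation of f about a, evaluated at x."""
    return f(a) + df(a) * (x - a)

a = 0.0                      # expansion point (illustrative choice)
points = (0.1, 0.5, 1.5)     # increasingly far from the expansion point

# Absolute error of the linearization at each point.
errors = [abs(math.sin(x) - taylor1(math.sin, math.cos, a, x)) for x in points]

for x, err in zip(points, errors):
    print(f"x = {x:.1f}  |sin(x) - linearization| = {err:.4f}")
```

The error grows quickly with distance from $a$, which is the weakness that lifted linearizations try to sidestep.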

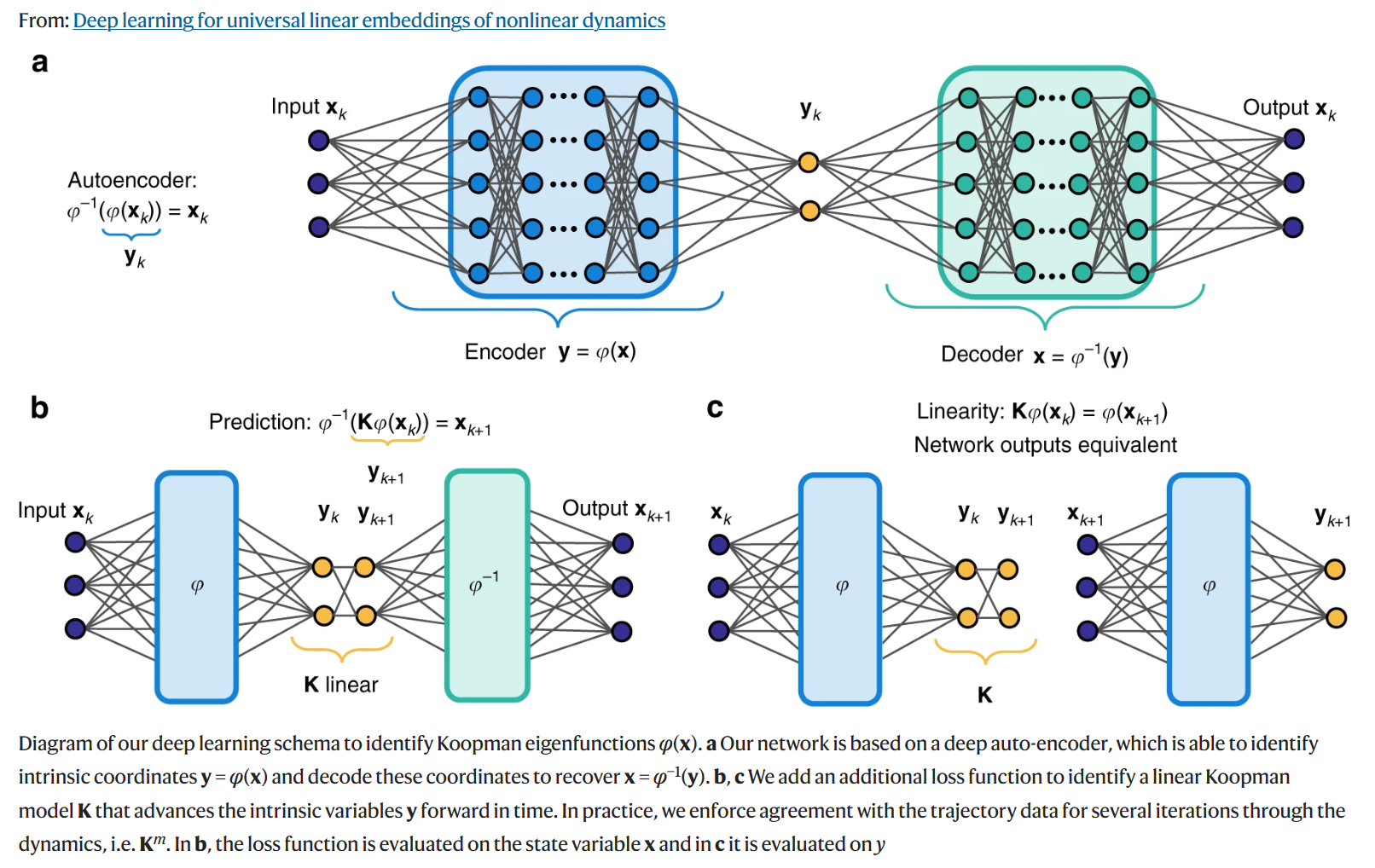

Deep neural networks have emerged as a useful way to produce these lifted linear models.

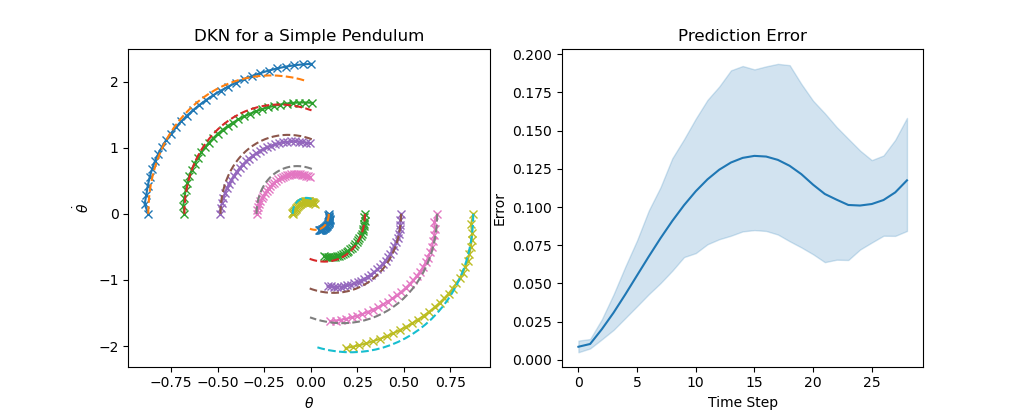

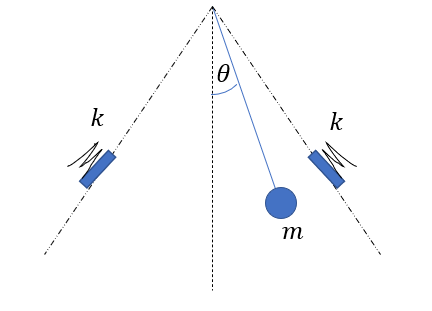

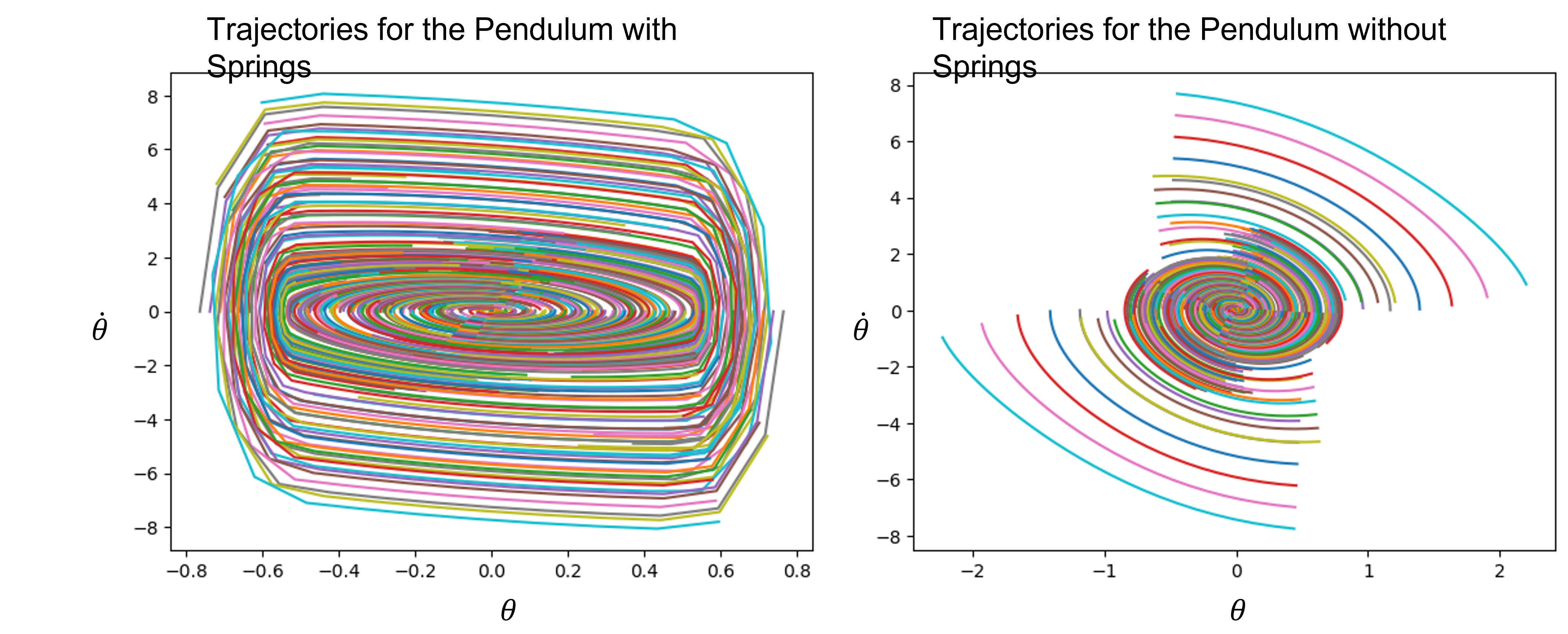

While the potential of DKNs has already been explored in recent years, the field is still being actively studied. In this blog post, I am interested in exploring how a DKN can be used to model a particular kind of dynamical system: one with piecewise dynamics that vary discretely across state space. These systems are inherently challenging for traditional, point-wise linearization techniques. To explain this, we can consider an example inspired by our old friend, the simple pendulum.

Consider a pendulum as before, but with the addition of two springs located at $\theta=30^\circ$ and $\theta=-30^\circ$. If we were to consider a point arbitrarily close to one of these springs, say at $\theta=29.99…^\circ$, then a Taylor series about this point, even with infinitely many terms, would not be able to accurately represent the dynamics when the spring is engaged. In contrast, a lifted linearization may better model such a system thanks to its ability to incorporate information beyond a single point.

\(\ddot\theta = f(\theta,\dot\theta) = \begin{cases} -g\sin{\theta}-b\dot\theta, & \theta\in [-30^\circ,30^\circ]\\ -g\sin{\theta}-b\dot\theta-k(\theta+30^\circ), & \theta<-30^\circ\\ -g\sin{\theta}-b\dot\theta-k(\theta-30^\circ), & \theta>30^\circ \end{cases}\)
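As a rough sketch, the piecewise dynamics above could be coded as follows. The numeric values of `G` and `B` are assumptions made for illustration, and I measure the angle in radians (so the spring deflection is in radians rather than degrees), which rescales the effective stiffness relative to the equation as written.

```python
import math

G = 9.81                      # assumed value for the lumped gravity term g
B = 0.5                       # assumed damping coefficient b
LIMIT = math.radians(30.0)    # spring contact angle, converted to radians

def pendulum_accel(theta, omega, k):
    """Angular acceleration of the pendulum with one-sided springs at +/-30 degrees."""
    accel = -G * math.sin(theta) - B * omega
    if theta > LIMIT:          # upper spring engaged
        accel -= k * (theta - LIMIT)
    elif theta < -LIMIT:       # lower spring engaged
        accel -= k * (theta + LIMIT)
    return accel

# Finite-difference slopes just inside and just outside the contact angle.
k, eps = 1000.0, 1e-6
f_at = pendulum_accel(LIMIT, 0.0, k)
slope_in = (f_at - pendulum_accel(LIMIT - eps, 0.0, k)) / eps
slope_out = (pendulum_accel(LIMIT + eps, 0.0, k) - f_at) / eps
print(f"slope inside: {slope_in:.2f}, slope outside: {slope_out:.2f}")
```

The acceleration itself is continuous at the contact angle, but its slope jumps by roughly $k$, which is exactly the kind of kink that no Taylor expansion taken on one side can see.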

That isn’t to say that a brute-force implementation of a DKN would necessarily be successful in this case either. Piecewise, switched, or hybrid systems (terminology depending on who you ask) are composed of particularly harsh nonlinearities due to their discontinuous derivatives. These can be difficult for lifted linearization approaches to model.

As a bit of a spoiler for the conclusion of this report, we don’t end up seeing any noticeable improvement from pre-training the DKN. Nevertheless, the process of experimenting with the proposed approaches was an insightful experience and I am happy to share the results below.

Proposed Approaches

I experimented with two approaches for pre-training our DKN: one inspired by curriculum learning, and one that gradually increases the number of observables the model learns.

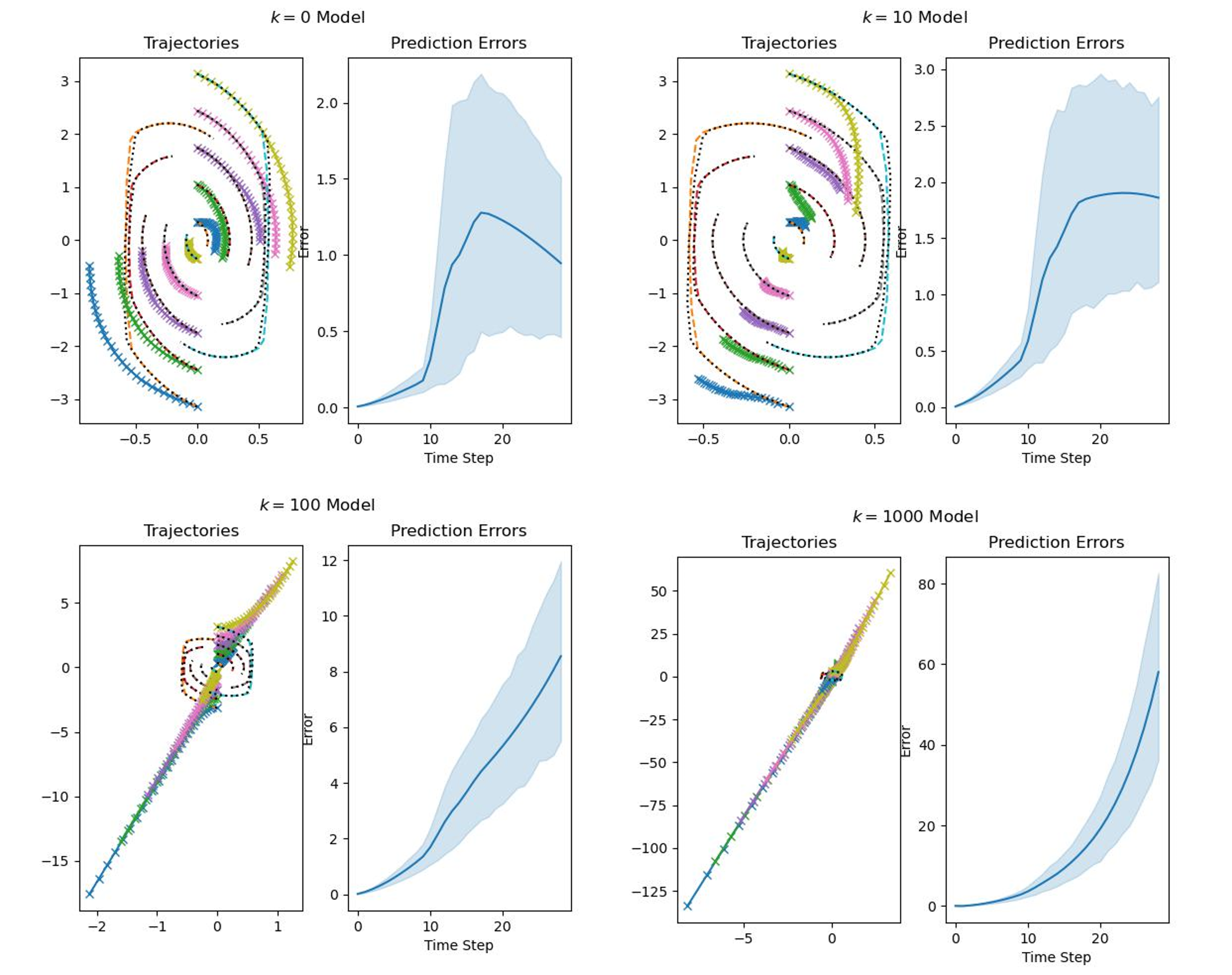

In the case of applying curriculum learning, we considered an approach with a data-based curriculum. In these cases, the difficulty of the training data is gradually increased over time. This has the potential benefit of allowing a model to more readily learn a challenging task, while also preventing a situation where a model is not sufficiently ‘challenged’ by new data during the training process. Our curriculum learning approach sought to take advantage of DKNs’ already good performance for the standard pendulum case. Intuitively, we identify the spring’s stiffness as the primary source of increased difficulty in our toy system. With this in mind, I created four data sets with different values for the spring constant, $k=0,10,100,1000$. A single model was then trained sequentially on these data sets. If our intuition is correct, we would expect to see the model gradually learn to account for the presence of the spring while maintaining the dynamics of a simple pendulum closer to the origin.
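The shape of this curriculum can be sketched in a few lines. To keep the example self-contained, a plain linear one-step predictor trained by gradient descent stands in for the DKN; it is not the model used in this project. The physical constants, time step, Euler integrator, sampling range, epoch count, and learning rate are all assumptions chosen for illustration. Only the stiffness schedule and the warm start between stages come from the description above.

```python
import numpy as np

G, B, DT = 9.81, 0.5, 0.01       # assumed gravity term, damping, and time step
LIMIT = np.deg2rad(30.0)         # spring contact angle

def step(x, k):
    """One explicit-Euler step of the spring-limited pendulum (illustrative integrator)."""
    theta, omega = x
    spring = -k * (theta - np.clip(theta, -LIMIT, LIMIT))   # zero between the springs
    alpha = -G * np.sin(theta) - B * omega + spring
    return np.array([theta + DT * omega, omega + DT * alpha])

def make_dataset(k, n=256, seed=0):
    """Random states X and their one-step successors Y for stiffness k."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(-1.0, 1.0, size=(n, 2))
    Y = np.array([step(x, k) for x in X])
    return X, Y

def train_stage(K, X, Y, epochs=200, lr=0.5):
    """Gradient descent on the mean squared one-step error of y ~ K x."""
    for _ in range(epochs):
        grad = (X @ K.T - Y).T @ X / len(X)
        K = K - lr * grad
    return K

K = np.eye(2)                                # initial guess for the stand-in model
history = []
for k in [0.0, 10.0, 100.0, 1000.0]:         # stiffness curriculum from the text
    X, Y = make_dataset(k)
    K = train_stage(K, X, Y)                 # warm start from the previous stage
    history.append((k, float(np.mean((X @ K.T - Y) ** 2))))

for k, mse in history:
    print(f"k = {k:6.0f}  final training MSE = {mse:.2e}")
```

The important design choice is that `K` is carried from one stage to the next rather than re-initialized, so each stage starts from a model that already fits the easier dynamics.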

For the second approach tested in this project, it is necessary to consider what an observable is meant to represent in a lifted linearization. As an additional piece of terminology, the function which is used to generate a given observable is referred to as an observable function.

Based on this understanding of Koopman eigenfunctions, we are motivated to see if a DKN could be coaxed into more readily learning spatially-relevant observables. If we consider our system of interest, the pendulum with springs, we posit that different regions of state space would be primarily influenced by different eigenfunctions. In particular, the larger central region where the pendulum’s dynamics are independent of the springs may be expected to be affected by a set of eigenfunctions with a lower spatial frequency and a global relevance. That is, eigenfunctions which better represent the dynamics of the system averaged throughout the state space and which may be valid everywhere – even when the springs are engaged, the natural dynamics of the pendulum are still in effect. In contrast, the dynamics when the springs are engaged (each spring is active in a comparatively smaller region of state space) may rely heavily on a set of eigenfunctions that are only locally relevant.

While I believe that this is an interesting thought, it is worth noting that this intuitive motivation is not necessarily backed up with a rigorous mathematical understanding. Nevertheless, we can empirically test whether the approach can lead to improved results.

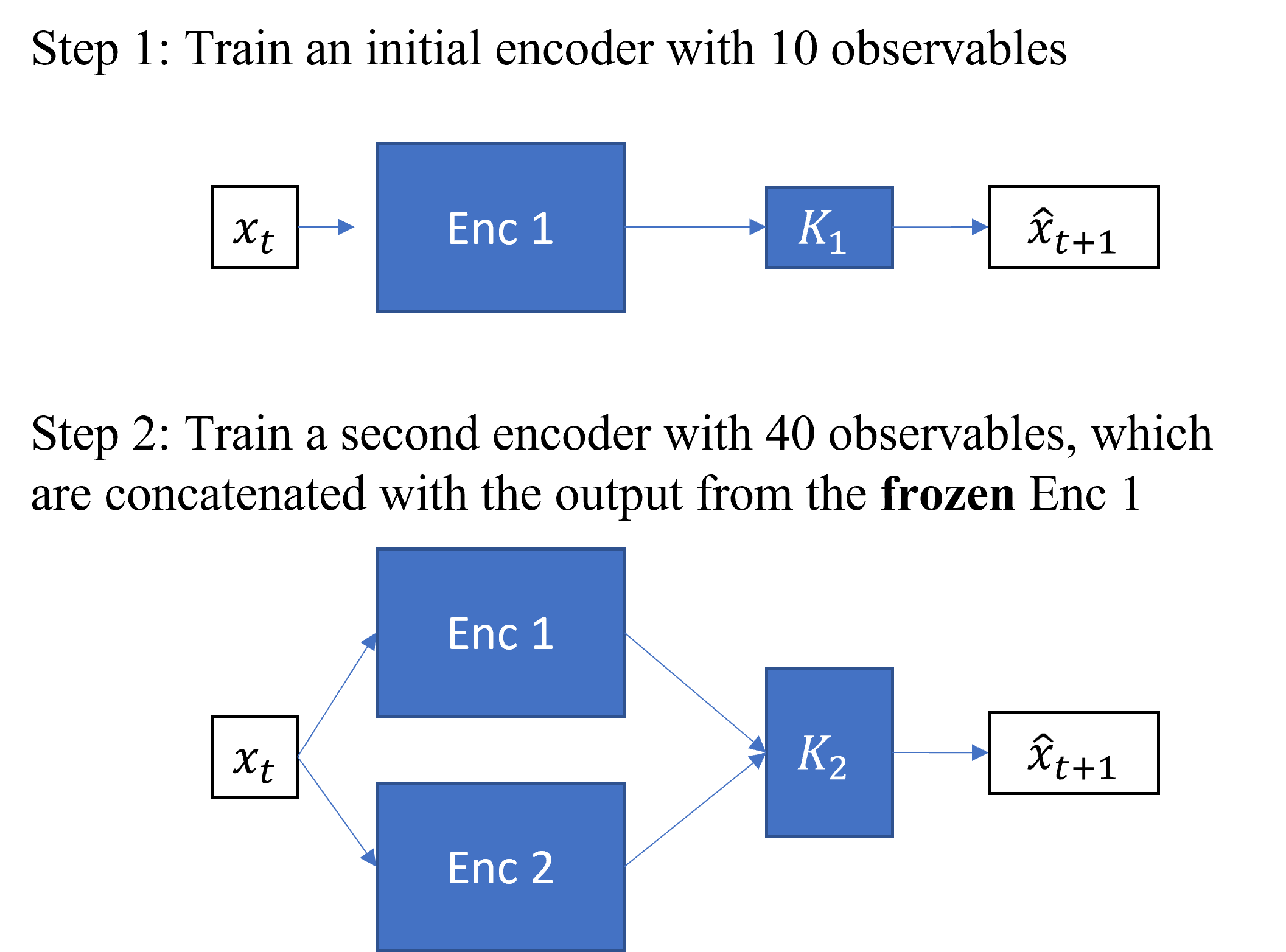

In contrast to the curriculum learning approach, we have only a single set of data: that generated from a model of a pendulum with a spring stiffness of $k=1000$. The standard DKN approach favors a larger number of observables, which in general allows a system to be more easily linearized. Here, we instead deliberately constrain the latent space dimension to be small. The intention is for this restriction to limit the number of observable functions that the model can represent, encouraging it to learn observables with a low spatial frequency and which are relevant across a larger region of state space. In our system of interest, these would be observable functions that represent the dynamics of the pendulum without the springs.

Once we have initially trained this smaller model, we use its encoder within a larger model. This initial encoder is kept fixed in future training processes so that it continues to represent the same set of observables. An additional encoder is then trained in the larger model, with the goal being to learn additional observables capable of making up for the initial model’s deficiencies. If the initial model learned the low spatial frequency observables as hoped, then we would expect this additional encoder to learn observables that are more relevant in areas where the springs are exerting a force on the pendulum. In practice, we could see this as a particular form of curriculum learning where the complexity of the model is increased over time. A key difference here compared to traditional approaches is that instead of increasing the complexity of the model by adding layers depth-wise, we are effectively increasing the width of the model by giving it the ability to learn additional observables.
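The widening step can be pictured as a concatenation of lifted features. In the sketch below, the encoders are small randomly initialized networks standing in for trained ones, and `make_encoder`, `lift_small`, and `lift_large` are hypothetical names of my own. The sizes of 10 and 40 observables match the split reported in the analysis later in this post.

```python
import numpy as np

def make_encoder(rng, d_in, d_out, hidden=16):
    """Random one-hidden-layer network used as a stand-in for a trained encoder."""
    W1 = rng.normal(size=(d_in, hidden))
    W2 = rng.normal(size=(hidden, d_out))
    return lambda x: np.maximum(x @ W1, 0.0) @ W2

rng = np.random.default_rng(0)
frozen_encoder = make_encoder(rng, 2, 10)   # stage 1: 10 observables, held fixed afterwards
new_encoder = make_encoder(rng, 2, 40)      # stage 2: 40 additional observables to be trained

def lift_small(x):
    """Lifted state of the initial model: states followed by the first observables."""
    return np.concatenate([x, frozen_encoder(x)], axis=-1)

def lift_large(x):
    """Lifted state of the widened model: the small lift plus the new observables."""
    return np.concatenate([x, frozen_encoder(x), new_encoder(x)], axis=-1)

x = np.array([[0.1, -0.2], [0.6, 0.0]])     # two example (theta, theta_dot) states
z_small, z_large = lift_small(x), lift_large(x)
print(z_small.shape, z_large.shape)
```

Because the first block of the widened lift is produced by the frozen encoder, it is identical to the small model’s lift. During the second training stage only the new encoder (and, I assume, the enlarged time-evolution matrix) would receive updates.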

The Model

To reduce the influence that other factors may have on the results of our experiments, I sought to minimize any changes to the overall structure of the DKNs being used, save for those being studied. Chief among the elements held fixed were the number of hidden layers in the network, the loss function being used, and the input. Other variables, such as the optimizer, the batch size, and the learning rate, were also kept as unchanged as feasible. The need to tune each of these other hyperparameters and the challenges in doing so are well-documented in the machine learning field, and as such I won’t spend any additional time describing the processes involved.

The general encoder architecture of the networks being used was as follows, with $D_x$ being the number of states (2, in the case of the pendulum) and $D_e$ being the number of observables:

| Layer | Input Dimensions | Output Dimensions | Nonlinearity |
|---|---|---|---|
| Linear | $D_x$ | 16 | ReLU |
| Linear | 16 | 16 | ReLU |
| Linear | 16 | $D_e$ | None |

In addition to the encoder network, a linear layer was present to determine the time evolution of the observables. For this linear layer, the input and output dimensions were both $D_e + D_x$ since our final set of observables always had the system’s states concatenated onto those learned by the encoder.
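For readers who prefer code to tables, here is a minimal NumPy sketch of the forward pass implied by this architecture. The weights are random placeholders rather than trained values, $D_e=10$ is an arbitrary choice, and I assume the time-evolution layer has no bias and that the states occupy the first $D_x$ entries of the lifted vector.

```python
import numpy as np

D_X, D_E = 2, 10                       # D_E = 10 is an arbitrary choice for this sketch

rng = np.random.default_rng(0)
sizes = [D_X, 16, 16, D_E]             # layer widths from the table above
params = [(rng.normal(scale=0.1, size=(m, n)), np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]
K = rng.normal(scale=0.1, size=(D_X + D_E, D_X + D_E))   # linear time-evolution layer

def encoder(x):
    """Three linear layers with ReLU after the first two and no final nonlinearity."""
    h = x
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)
    return h

def lift(x):
    """Concatenate the raw states with the learned observables."""
    return np.concatenate([x, encoder(x)], axis=-1)

def predict_next_state(x):
    """Advance the lifted state one step with K and read the states back off."""
    z_next = lift(x) @ K.T
    return z_next[..., :D_X]

x_batch = rng.normal(size=(5, D_X))
print(lift(x_batch).shape, predict_next_state(x_batch).shape)
```

Keeping the states inside the lifted vector means no decoder is needed: the prediction is read directly from the first $D_x$ entries.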

The loss function that I used was composed of two main components: a loss related to the time evolution of the observables being output by the encoder, and a loss related to the time evolution of the state variables. In the literature, additional loss terms are often included to help regularize the network during training. These were not found to be significant in the testing done for this report, however, and so were excluded. Tests were also done with different weights between the state loss and the observable loss, with an equal balance between the two found to provide reasonable outcomes. Another hyperparameter that we needed to tune was the number of time steps over which to enforce a loss on the values predicted by the model. In this report, we stuck to 30 time steps, although significant experimentation was not done to explore how varying this parameter may have affected the results. We did briefly look into whether a weight on any of the loss terms that decayed over time would improve training, and did not see any immediate benefits.

\(\mathrm{loss}=\mathrm{multistep\_loss\_state}+\mathrm{multistep\_loss\_observables}\)

\(\mathrm{multistep\_loss\_state}=\sum^{30}_{t=1}\lVert(\psi(\mathbf{x}_t)-K^t\psi(\mathbf{x}_0))[:2]\rVert_{\mathrm{MSE}}\)

\(\mathrm{multistep\_loss\_observables}=\sum^{30}_{t=1}\lVert(\psi(\mathbf{x}_t)-K^t\psi(\mathbf{x}_0))[2:]\rVert_{\mathrm{MSE}}\)
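A direct NumPy transcription of these loss terms might look like the sketch below. The function name `multistep_losses` is my own, and I interpret the MSE norm as the mean of the squared entries. The toy check uses a linear system with a hand-built lift, for which an exact $K$ exists, purely to confirm the bookkeeping.

```python
import numpy as np

def multistep_losses(traj, psi, K, d_x=2):
    """Return (state_loss, observable_loss) for states x_0..x_T stored in traj."""
    z_pred = psi(traj[0])
    state_loss, obs_loss = 0.0, 0.0
    for t in range(1, len(traj)):
        z_pred = K @ z_pred                      # K^t psi(x_0), built one step at a time
        err = psi(traj[t]) - z_pred
        state_loss += np.mean(err[:d_x] ** 2)    # first d_x entries are the states
        obs_loss += np.mean(err[d_x:] ** 2)      # remaining entries are the observables
    return state_loss, obs_loss

# Toy check: a linear system x_{t+1} = A x_t with the lift psi(x) = [x, x].
A = np.array([[0.99, 0.05], [-0.05, 0.99]])
traj = [np.array([1.0, 0.0])]
for _ in range(30):                              # 30 steps, matching the horizon in the text
    traj.append(A @ traj[-1])
traj = np.array(traj)

psi = lambda x: np.concatenate([x, x])
Z = np.zeros((2, 2))
K_exact = np.block([[A, Z], [Z, A]])

state_loss, obs_loss = multistep_losses(traj, psi, K_exact)
total = state_loss + obs_loss
wrong_total = sum(multistep_losses(traj, psi, np.eye(4)))
print(f"exact K: {total:.2e}, identity K: {wrong_total:.2e}")
```

With the exact $K$ the loss vanishes up to floating-point error, while a mismatched $K$ accumulates error at every step of the rollout.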

Analysis

Curriculum Learning

The initial model for stiffness $k=0$ was trained on the simple pendulum dynamics for 600 epochs, and served as the pre-trained model for this approach. Subsequent models were each trained for 200 epochs with the Adam optimizer and a decaying learning rate scheduler. When analyzing the performance of these models, we looked at how the error for a set of trajectories not in the training set evolved over time.

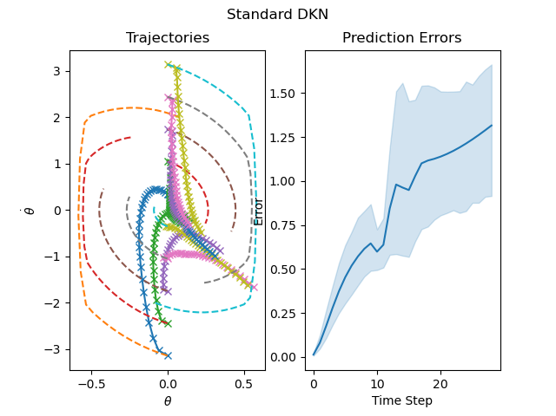

By this metric, we observe the performance of the model gradually getting worse. While this on its own is not too surprising, the final model ends up performing significantly worse than a DKN with the equivalent number of observables trained from scratch. Interestingly, it looks like the final model is unstable, with the trajectories blowing up away from the origin. Issues surrounding the stability of linearized models are not new in the field of Koopman linearizations. Prior works have proposed several methods to help alleviate this issue, such as adding an additional term to the loss function which stabilizes the time-evolution matrix. While there was no time to implement this change for this report, it could be an interesting modification to attempt for future work.
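One simple way to check for this kind of instability is to look at the eigenvalues of the learned time-evolution matrix: a discrete-time linear system $z_{t+1}=Kz_t$ has trajectories that decay to the origin only if every eigenvalue of $K$ has magnitude below one. The sketch below is only a diagnostic, not the stabilizing loss term proposed in prior works, and the two example matrices are made up for illustration.

```python
import numpy as np

def spectral_radius(K):
    """Largest eigenvalue magnitude of K."""
    return float(np.max(np.abs(np.linalg.eigvals(K))))

K_stable = np.array([[0.9, 0.2], [0.0, 0.8]])      # made-up example, all |eig| < 1
K_unstable = np.array([[1.05, 0.0], [0.0, 0.5]])   # made-up example, one |eig| > 1

z0 = np.array([1.0, 1.0])
for name, K in [("stable", K_stable), ("unstable", K_unstable)]:
    z30 = np.linalg.matrix_power(K, 30) @ z0       # state after a 30-step rollout
    print(f"{name}: spectral radius = {spectral_radius(K):.2f}, "
          f"|z_30| = {np.linalg.norm(z30):.3f}")
```

A spectral radius only slightly above one is enough for long rollouts to diverge, which would be consistent with errors that look fine early on and blow up later.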

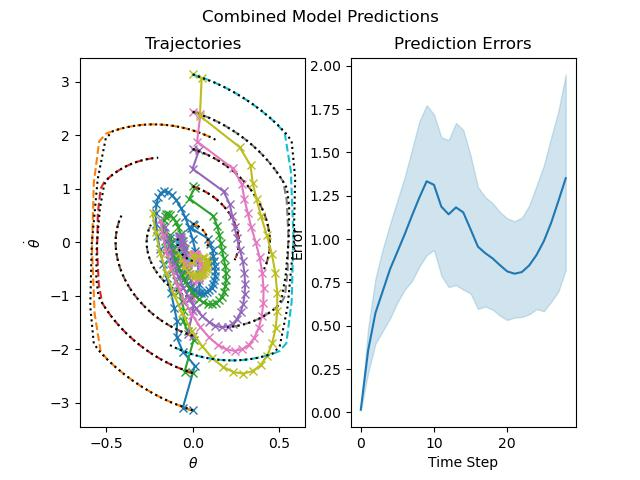

Learning New Observables

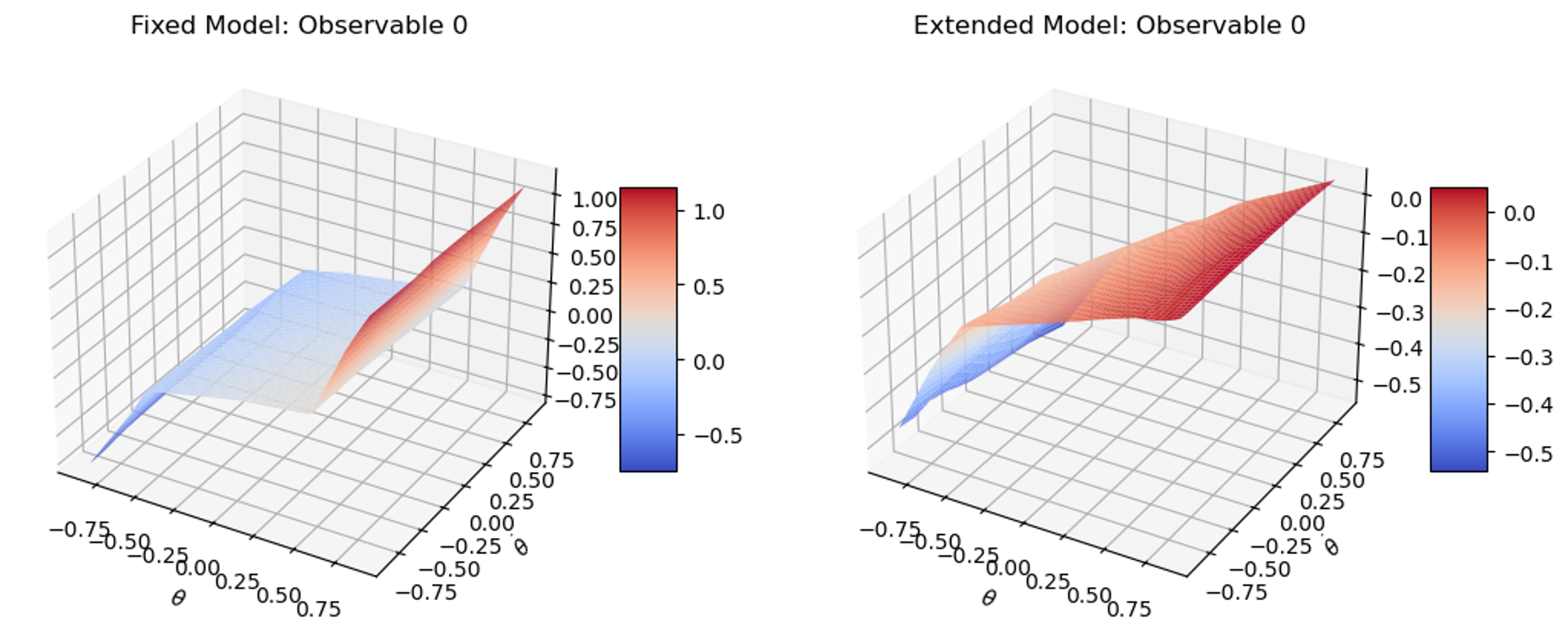

While trying to gradually learn additional observables for the model, we started with a network that learned 10 observable functions and trained it for 600 epochs. Once this process was complete, an extended model learned an additional 40 observable functions for an additional 600 epochs. The end result was comparable in performance to a single aggregate model of 50 observables trained from scratch. The aggregate model did appear to outperform our gradually trained model during the initial time steps, while slightly underperforming in comparison at the later time steps. This may be due to some differences in the stability of the two learned linear models, although further investigation would be needed to verify this. Part of the motivation for this method was the hope that the network would learn locally relevant observable functions. The learned observables were plotted on a grid to visualize them and see if this was the case, but there were no distinctive qualitative features indicating that different observables were learned for different regions of state space.

Conclusion

In this project, we sought to test two modifications to a DKN training scheme on an example of a piecewise dynamical system. By using a curriculum learning process or gradually increasing the number of observable functions, we hypothesized that the DKN would show better performance than an aggregate model trained from scratch. Ultimately, we found that neither of the proposed methods led to significant improvements.

One of the potential causes of underperformance is the learned linear models’ instability, which is a known issue for lifted linearization techniques.

It is also worth considering the severe limitations of this study, imposed upon it by the need to tune a wide variety of hyperparameters. Even in the process of creating a linear model for the simple pendulum, I observed a wide range of performance based upon how the cost function or learning rate were varied. While some effort was taken to tune these and other hyperparameters for the models I explored, this process was far from exhaustive.

Moreover, the proposed changes to the typical DKN architecture only served to add additional hyperparameters into the mix. What spring stiffnesses should be used during curriculum learning? Should the learning rate be decreased between different curriculum stages, or should the number of epochs be varied? How about the ratio of observables between the two models used in the second approach: is a 10:40 split really optimal? Some variations of these hyperparameters were considered during this project, but again an exhaustive search for optimal values was impossible.

While this means that there is a chance that I simply used the wrong selection of hyperparameters to see better performance from the tested approaches, it also highlights the sensitivity that I observed in the performance of the DKNs. Even beyond the considerations described thus far, there are further design choices that can impact the structure and performance of learned linearizations. Some approaches augment the state variables with time-delayed measurements, for example. In other cases, the state variables are not included as observables and are instead extracted using a decoder network. This latter case is of particular interest, since recent work in the field has identified that certain types of nonlinear systems are impossible to linearize with a set of observables that include the states.

Ultimately, while the experiments in this project didn’t agree with my hypothesis (and resulted in some underwhelming predictive performance), I gained a newfound appreciation for the process of training these models along the way.