Tracing the Seeds of Conflict: Advanced Semantic Parsing Techniques for Causality Detection in News Texts

This blog post outlines a research project that aims to uncover cause-effect relationships in the sphere of (political) conflicts using a frame-semantic parser.

Introduction

“In the complex world of political conflicts, understanding the underlying dynamics can often feel like trying to solve a puzzle with missing pieces. This project attempts to find those missing pieces through a novel approach that combines the insights of qualitative research with the precision of quantitative analysis.”

Retrieved from https://conflictforecast.org

Political conflicts are multifaceted and dynamic, posing significant challenges for researchers attempting to decode their intricate patterns. Traditional methods, while insightful, often grapple with the dual challenges of scale and specificity. This project embarks on an innovative journey to bridge this gap, leveraging a frame-semantic parser to illustrate its applicability for the task and to discuss an approach to achieve domain-specificity for the model using semantic similarity. By synthesizing the depth of qualitative research into the scalability of quantitative methods, we aim to contribute to more informed analyses and actions in low-resource, low-tech domains like conflict studies.

On this journey, the project's key contributions are:

- Advancing Frame-Semantic Parsing in Conflict Research: We introduce the frame-semantic parser, a method that brings a high degree of explainability to conflict studies. Particularly when used in conjunction with news articles, this parser emerges as a powerful tool in areas where data is scarce, enabling deeper insights into the nuances of political conflicts.

- Harnessing Semantic Similarity for Domain Attunement: The project underscores the significance of semantic similarity analysis as a precursor to frame-semantic parsing. This approach finely tunes the parser to specific thematic domains, addressing the gaps often present in domain distribution of common data sources. It illustrates how tailoring the parser input can yield more contextually relevant insights.

- Demonstrating Domain-Dependent Performance in Frame-Semantic Parsing: We delve into the impact of thematic domains on the performance of a transformer-based frame-semantic parser. The research highlights how the parser’s effectiveness varies with the domain of analysis, primarily due to biases and structural peculiarities in the training data. This finding is pivotal for understanding the limitations and potential of semantic parsing across different contexts.

- Developing Domain-Specific Performance Metrics: In environments where additional, domain-specific labeled test data is scarce, the project proposes an intuitive method to derive relevant performance metrics. This approach not only aligns the evaluation more closely with the domain of interest but also provides a practical solution for researchers working in resource-constrained settings.

Literature Background

Qualitative Research on Conflicts

Qualitative research has long been a cornerstone in the study of political conflicts. This body of work, now well-established, emphasizes the unique nature of each conflict, advocating for a nuanced, context-specific approach to understanding the drivers and dynamics of conflicts. Researchers in this domain have developed a robust understanding of the various pathways that lead to conflicts, highlighting the importance of cultural, historical, and socio-political factors in shaping these trajectories. While rich in detail and depth, this approach often faces challenges in scalability and systematic analysis across diverse conflict scenarios.

The Role of Quantitative Methods

The emergence of computational tools has spurred a growing interest in quantitative approaches to conflict research. These methods primarily focus on predicting the severity and outcomes of ongoing conflicts, with some success

Bridging the Gap with Explainable Modeling Approaches

The challenge now lies in bridging the insights from qualitative research with the systematic, data-driven approaches of quantitative methods. While the former provides a deep understanding of conflict pathways, the latter offers tools for large-scale analysis and prediction. The key to unlocking this synergy lies in developing advanced computational methods to see the smoke before the fire – identifying the early precursors and subtle indicators of impending conflicts

Data

The project capitalizes on the premise that risk factors triggering a conflict, including food crises, are frequently mentioned in on-the-ground news reports before being reflected in traditional risk indicators, which can often be incomplete, delayed, or outdated. By harnessing newspaper articles as a key data source, this initiative aims to identify these causal precursors earlier and more accurately than conventional methods.

News Articles as Data Source

News articles represent a valuable data source, particularly in research domains where timely and detailed information is crucial. In contrast to another “live” data source that is currently popular amongst researchers - social media data - news articles are arguably less prone to unverified narratives. While news articles typically undergo editorial checks and balances, ensuring a certain level of reliability and credibility, they are not free of bias and should be handled with caution, as arguably every data source should. To counteract potential biases of individual news outlets, accessing a diverse range of news sources is essential. Rather than having to scrape or otherwise collect news articles ourselves, we can draw on a set of available resources:

- NewsAPI: This platform provides convenient access to a daily limit of 100 articles, offering diverse query options. Its integration with a Python library streamlines the process of data retrieval. However, the limitation lies in the relatively small number of data points it offers, potentially restricting the scope of analysis.

- GDELT Database: Renowned for its vast repository of historical information spanning several decades, GDELT stands as a comprehensive data source. Its extensive database is a significant asset, but similar to NewsAPI, it predominantly features article summaries or initial sentences rather than complete texts, which may limit the depth of analysis.

- Factiva: A premium service that grants access to the complete bodies of articles from a plethora of global news sources in multiple languages. While offering an exhaustive depth of data, this resource comes with associated costs, which may be a consideration for budget-constrained projects.

- RealNews: As a cost-free alternative, this dataset encompasses entire newspaper articles collated between 2016 and 2019. Selected for this project due to its unrestricted accessibility and comprehensive nature, it provides a substantial set of articles, making it a valuable resource for in-depth analysis.

Descriptive Analysis of the Data

The analysis delved into a selected subset of 120,000 articles from the RealNews open-source dataset. This subset was chosen randomly to manage the extensive scope of the complete dataset within the project’s time constraints. Each article in this subset provided a rich array of information, including url, url_used, title, text, summary, authors, publish_date, domain, warc_date, and status.

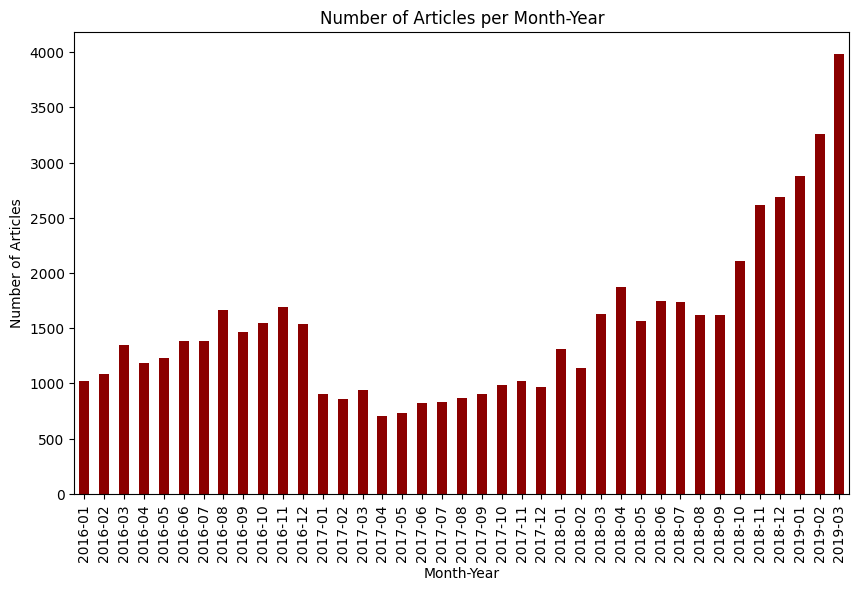

The range of articles spans from 1869 to 2019, but for focused analysis, we narrowed the scope to articles from January 2016 through March 2019. This temporal delimitation resulted in a dataset comprising 58,867 articles. These articles originated from an expansive pool of 493 distinct news outlets, offering a broad perspective on global events and narratives. The distribution of these articles across the specified time frame shows the expected increase in news reporting over time, as visualized below.

Counts of Articles over Time

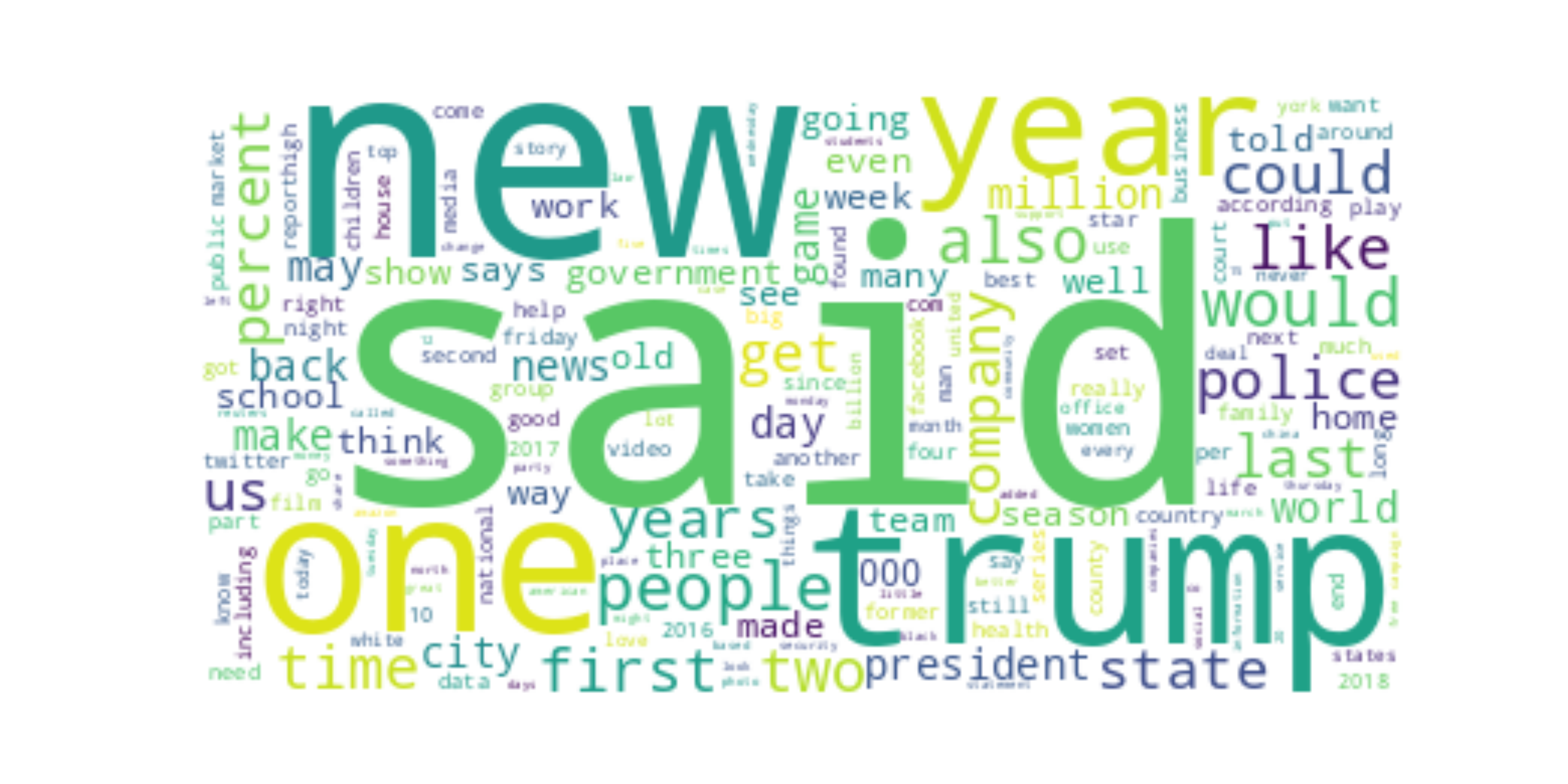
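The date filtering and the counts over time can be sketched in a few lines. This is a minimal illustration under assumptions: the records are invented toy data, only the publish_date field name comes from the dataset description, and the helper names in_window and monthly_counts are hypothetical.

```python
from collections import Counter
from datetime import date

# Toy records (invented); only the "publish_date" field name is taken from the post.
articles = [
    {"title": "a", "publish_date": date(1869, 5, 1)},
    {"title": "b", "publish_date": date(2016, 1, 15)},
    {"title": "c", "publish_date": date(2018, 7, 3)},
    {"title": "d", "publish_date": date(2018, 7, 20)},
    {"title": "e", "publish_date": date(2019, 4, 2)},
]

# Analysis window described in the post: January 2016 through March 2019.
START, END = date(2016, 1, 1), date(2019, 3, 31)


def in_window(article, start=START, end=END):
    """True if the article has a publish date inside the analysis window."""
    published = article.get("publish_date")
    return published is not None and start <= published <= end


def monthly_counts(records):
    """Count articles per calendar month, keyed as 'YYYY-MM'."""
    return Counter(a["publish_date"].strftime("%Y-%m") for a in records)


selected = [a for a in articles if in_window(a)]
counts = monthly_counts(selected)
```

Sorting the keys of counts gives the series that a plot of articles over time would show.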

To understand the content of our dataset’s news articles better, we utilized the TfidfVectorizer, a powerful tool that transforms text into a numerical representation, emphasizing key words based on their frequency and distinctiveness within the dataset. To ensure focus on the most relevant terms, we filtered out commonly used English stopwords. The TfidfVectorizer then generated a tf-idf matrix, assigning weights to words that reflect their importance in the overall dataset. By summing the Inverse Document Frequency (IDF) of each term, we obtained the adjusted frequencies that helped identify the most influential words in our corpus. To visually represent these findings, we created a word cloud (see below), where the size of each word correlates with its relative importance.
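To make the weighting concrete, here is a dependency-free sketch of the computation. It is not the project's code: it assumes scikit-learn's default settings for TfidfVectorizer (smoothed idf and L2-normalized rows) and reads the summation step as adding up each term's tf-idf weight over all documents. The stopword list, the toy documents, and the function names are illustrative.

```python
import math
import re
from collections import Counter

# Tiny illustrative stopword list (assumption; the post filtered common English stopwords).
STOPWORDS = {"the", "a", "an", "of", "in", "and", "to", "is", "as"}


def tokenize(text):
    """Lowercase the text, keep alphabetic tokens, drop stopwords."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def summed_tfidf(docs):
    """Return a Counter mapping each term to its tf-idf weight summed over all docs."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term occur?
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed idf, as in scikit-learn's default: ln((1 + n) / (1 + df)) + 1
    idf = {t: math.log((1 + n_docs) / (1 + d)) + 1 for t, d in df.items()}
    totals = Counter()
    for tokens in tokenized:
        tf = Counter(tokens)
        weights = {t: tf[t] * idf[t] for t in tf}
        # L2-normalize each document row before summing.
        norm = math.sqrt(sum(w * w for w in weights.values()))
        for term, weight in weights.items():
            totals[term] += weight / norm
    return totals


docs = [
    "troops clash near the border",
    "border talks resume",
    "markets rally as talks resume",
]
weights = summed_tfidf(docs)
top_terms = [term for term, _ in weights.most_common(3)]
```

The summed weights can then be passed to a word cloud library as term frequencies, so that word size follows the adjusted importance.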

Word Cloud for Entire News Article Dataset (tf-idf adjusted)

Methodology

We showcase the applicability of frame-semantic parsing to the study of conflicts and inform the model with domain-specific seed phrases identified through semantic similarity analysis. This approach not only demonstrates the effectiveness of the method in conflict studies but also showcases how domain-specific applications of deep learning tasks can be accurately applied and measured. Thus, we not only validate the utility of frame-semantic parsing in conflict analysis but also explore innovative ways to tailor and evaluate domain-specific performance metrics.

The Frame-Semantic Parser

Contextualizing the Approach

In the pursuit of bridging the gap between the robust theoretical understanding of conflict dynamics and the practical challenges in data availability, the frame-semantic parser emerges as a promising methodological tool. In a recent study (

Retrieved from https://github.com/swabhs/open-sesame

How Does a Frame-Semantic Parser Work?

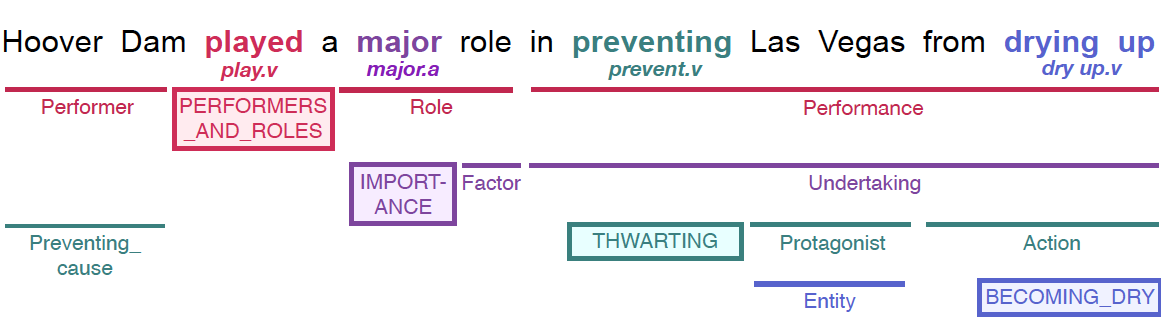

At the heart of frame-semantic parsing, as conceptualized by

The process of frame-semantic parsing constitutes three subtasks:

- Trigger Identification: This initial step involves pinpointing locations in a sentence that could potentially evoke a frame. It’s a foundational task that sets the stage for more detailed analysis.

- Frame Classification: Following trigger identification, each potential trigger is analyzed to classify the specific FrameNet frame it references. This task is facilitated by leveraging lexical units (LUs) from FrameNet, which provide a strong indication of potential frames.

- Argument Extraction: The final task involves identifying the frame elements and their corresponding arguments within the text. This process adds depth to the frame by fleshing out its components and contextualizing its application within the sentence.
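The three subtasks can be illustrated with a deliberately simplified toy. Everything in it is a stand-in: the two-entry lexicon, the FrameInstance class, and the rule that text before the trigger is the Cause and text after it is the Effect are assumptions for illustration only. A real parser learns each step from FrameNet annotations instead of using lookups and position rules.

```python
from dataclasses import dataclass, field

# Hypothetical mini-lexicon mapping trigger words to FrameNet-style frame names.
TOY_LEXICAL_UNITS = {"caused": "Causation", "battle": "Hostile_encounter"}


@dataclass
class FrameInstance:
    name: str
    trigger_index: int
    elements: dict = field(default_factory=dict)


def identify_triggers(tokens):
    """Subtask 1: positions of tokens that might evoke a frame."""
    return [i for i, tok in enumerate(tokens) if tok.lower() in TOY_LEXICAL_UNITS]


def classify_frame(tokens, index):
    """Subtask 2: decide which frame the trigger evokes (here: a plain lookup)."""
    return TOY_LEXICAL_UNITS[tokens[index].lower()]


def extract_arguments(tokens, index, frame):
    """Subtask 3: crude stand-in that only handles the Causation frame."""
    if frame != "Causation":
        return {}
    return {
        "Cause": " ".join(tokens[:index]),
        "Effect": " ".join(tokens[index + 1:]),
    }


def parse(sentence):
    """Run the three toy subtasks in order on a single sentence."""
    tokens = sentence.rstrip(".").split()
    frames = []
    for index in identify_triggers(tokens):
        name = classify_frame(tokens, index)
        frames.append(FrameInstance(name, index, extract_arguments(tokens, index, name)))
    return frames


result = parse("The drought caused widespread famine.")
```

For this sentence the toy yields one Causation frame whose trigger is "caused", with "The drought" as Cause and "widespread famine" as Effect, which is the kind of structured output the downstream analysis relies on.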

While frame-semantic parsers have arguably not received as much attention as other language modeling methods, three major contributions of the past few years can be highlighted.

Implementation of the Frame-Semantic Parser

The implementation of our frame-semantic parser involves several key steps. We begin by splitting our text data into sentences using a split_into_sentences function. This granular approach allows us to focus on individual narrative elements within the articles and since frame-semantic parsers are reported to perform better on sentence-level

In the heart of our methodology, we utilize various functions to extract and filter relevant frames from the text. Our extract_features function captures the full text of each frame element, ensuring a comprehensive analysis of the semantic content. The filter_frames function then refines this data, focusing on frames that are explicitly relevant to conflict, as informed by research on causal frames in FrameNet.

To optimize the performance of our transformer-based parser, we build a process_batch function. This function handles batches of sentences, applying the frame semantic transformer model to detect and filter frames relevant to our study.

Our approach also includes a careful selection of specific frames related to causality and conflict as we are interested in these frames and not just any. We rely on both manually identified frame names (informed by

The implementation is designed to be efficient and scalable, processing large batches of sentences and extracting the most relevant semantic frames. This approach enables us to parse and analyze a substantial corpus of news articles, providing a rich dataset for our conflict analysis.
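A rough sketch of how these pieces could fit together is shown below. The function names split_into_sentences, filter_frames, and process_batch come from the description above, but their bodies are assumptions, as are the Frame class, the batched helper, and the two-frame RELEVANT_FRAMES set. The parser itself is passed in as a callable so the sketch runs without a model; fake_detect is a hypothetical stub standing in for the transformer.

```python
import re
from dataclasses import dataclass


@dataclass
class Frame:
    name: str
    elements: dict  # frame element name -> full text of the element


# Illustrative subset only; the project works with a larger list of causal frames.
RELEVANT_FRAMES = {"Causation", "Hostile_encounter"}


def split_into_sentences(text):
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def filter_frames(frames, relevant=RELEVANT_FRAMES):
    """Keep only frames whose name is in the set of relevant frames."""
    return [f for f in frames if f.name in relevant]


def process_batch(sentences, detect, relevant=RELEVANT_FRAMES):
    """Run the detector on each sentence and keep sentences with relevant frames."""
    results = []
    for sentence in sentences:
        kept = filter_frames(detect(sentence), relevant)
        if kept:
            results.append((sentence, kept))
    return results


def batched(items, size):
    """Yield consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def fake_detect(sentence):
    """Hypothetical stub standing in for the transformer-based parser."""
    if "caused" in sentence:
        return [Frame("Causation", {"Cause": "The drought", "Effect": "famine"})]
    return [Frame("Statement", {})]


sentences = split_into_sentences("The drought caused famine. Officials said aid is coming.")
results = [hit for batch in batched(sentences, 16) for hit in process_batch(batch, fake_detect)]
```

Injecting the detector keeps the filtering and batching logic testable on its own and makes it easy to swap in the real model.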

Seed Selection via Semantic Similarity Analysis to Inform Causal Modeling

Understanding Semantic Similarity

Semantic similarity plays a pivotal role in our methodology, serving as the foundation for expanding our understanding of how conflict is discussed in news articles. By exploring the semantic relationships between words and phrases, we can broaden our analysis to include a diverse array of expressions and viewpoints related to conflict. This expansion is not merely linguistic; it delves into the conceptual realms, uncovering varying narratives and perspectives that shape the discourse on conflict.

How Do We Compute Semantic Similarity?

To compute semantic similarity and refine our seed phrases, we employ a combination of distance calculation and cosine similarity measures. We begin with a set of initial key phrases (conflict, war, and battle), ensuring they capture the core essence of our thematic domain. We then leverage pretrained word embeddings from the Gensim library to map these phrases into a high-dimensional semantic space. We also experimented with more sophisticated embedding approaches (such as transformer-based ones) to compute the semantic similarity and thus obtain the seeds. When trading off complexity and runtime against performance, the simpler pretrained Gensim model prevailed.

Our methodology involves generating candidate seeds from our corpus of documents, including unigrams, bigrams, and trigrams, with a focus on those containing key words related to conflict. We filter these candidates based on their presence in the word vectors vocabulary, ensuring relevance and coherence with our seed phrases.
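Candidate generation along these lines might look as follows. This is a sketch under assumptions: the helper names are hypothetical, the tokenizer is a simple regular expression, and the vocabulary filter is read as requiring every word of an n-gram to be present in the embedding vocabulary.

```python
import re

# The initial key phrases named in the post.
KEYWORDS = {"conflict", "war", "battle"}


def ngrams(tokens, n):
    """All contiguous n-grams of a token list, joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def candidate_seeds(documents, vocabulary, keywords=KEYWORDS, max_n=3):
    """Collect uni-, bi-, and trigrams that contain a keyword and are fully in-vocabulary."""
    candidates = set()
    for doc in documents:
        tokens = re.findall(r"[a-z]+", doc.lower())
        for n in range(1, max_n + 1):
            for gram in ngrams(tokens, n):
                words = gram.split()
                has_keyword = bool(keywords.intersection(words))
                in_vocab = all(w in vocabulary for w in words)
                if has_keyword and in_vocab:
                    candidates.add(gram)
    return candidates


documents = ["Civil war erupted in the region"]
vocabulary = {"civil", "war", "erupted", "in", "the", "region"}
candidates = candidate_seeds(documents, vocabulary)
```

With pretrained Gensim vectors, the vocabulary argument would be the set of keys the embedding model knows.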

Using functions like calculate_distances and calculate_cosine_similarity, we measure the semantic proximity of these candidates to our initial seed phrases. This process involves averaging the distances or similarities across the seed phrases for each candidate, providing a nuanced understanding of their semantic relatedness.

The candidates are then ranked based on their similarity scores, with the top candidates selected for further analysis. This refined set of seed phrases, after manual evaluation and cleaning, forms the basis of our domain-specific analysis, guiding the frame-semantic parsing process towards a more focused and relevant exploration of conflict narratives.
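The scoring and ranking step can be sketched as below. The name calculate_cosine_similarity comes from the post, but its body, the phrase_vector and rank_candidates helpers, and the two-dimensional toy vectors are assumptions. In particular, representing a multi-word phrase by the mean of its word vectors is one common choice, not necessarily the one used in the project.

```python
import math


def calculate_cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def phrase_vector(phrase, vectors):
    """Average the word vectors of a phrase (assumed representation for n-grams)."""
    words = phrase.split()
    dims = len(vectors[words[0]])
    return [sum(vectors[w][i] for w in words) / len(words) for i in range(dims)]


def rank_candidates(candidates, seeds, vectors, top_k=50):
    """Score each candidate by its mean similarity to the seeds; return the top_k."""
    scored = []
    for cand in candidates:
        cand_vec = phrase_vector(cand, vectors)
        sims = [calculate_cosine_similarity(cand_vec, phrase_vector(s, vectors)) for s in seeds]
        scored.append((cand, sum(sims) / len(sims)))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


# Invented two-dimensional vectors standing in for pretrained embeddings.
toy_vectors = {
    "war": [1.0, 0.0],
    "battle": [0.9, 0.1],
    "conflict": [0.8, 0.2],
    "fighting": [0.85, 0.15],
    "banana": [0.0, 1.0],
}
ranked = rank_candidates(["banana", "fighting"], ["conflict", "war", "battle"], toy_vectors)
```

Averaging over all seeds rewards candidates that are close to the domain as a whole, so a phrase that is near only one seed ranks lower.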

Domain-Specific Metrics

In the final stage of our methodology, we integrate the identified seed phrases into the frame-semantic parser’s analysis. By comparing the model’s performance on a general set of sentences versus a subset containing at least one seed phrase, we assess the model’s domain-specific efficacy. This comparison not only highlights the general capabilities of large language models (LLMs) but also underscores their potential limitations in domain-specific contexts.

Our approach offers a pragmatic solution for researchers and practitioners in low-resource settings. We demonstrate that while general-purpose LLMs are powerful, they often require fine-tuning for specific domain applications. By utilizing identified domain-specific keywords to construct a tailored test dataset, users can evaluate the suitability of general LLMs for their specific needs.

In cases where technical skills and resources allow, this domain-specific dataset can serve as an invaluable tool for further refining the model through data augmentation and fine-tuning. Our methodology, therefore, not only provides a robust framework for conflict analysis but also lays the groundwork for adaptable and efficient use of advanced NLP tools in various thematic domains.

We present the results for these domain-specific measures in terms of F1 score, recall, and precision. Likewise, to illustrate performance differences across domains, we also ran the entire approach for the finance domain, starting with the keywords finance, banking, and economy.
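The idea of a domain-specific test set can be sketched as follows. This is an illustration, not the project's evaluation code: the example records, the field names, and the helper functions are hypothetical, and the metrics are micro-averaged over (sentence, frame) pairs, which is only one of several reasonable scoring choices.

```python
import re


def contains_seed(sentence, seeds):
    """True if any seed phrase occurs in the sentence as a whole word or phrase."""
    return any(
        re.search(rf"\b{re.escape(seed)}\b", sentence, re.IGNORECASE) for seed in seeds
    )


def domain_subset(examples, seeds):
    """Keep the labeled examples whose sentence mentions at least one seed phrase."""
    return [ex for ex in examples if contains_seed(ex["sentence"], seeds)]


def precision_recall_f1(gold, predicted):
    """Precision, recall, and F1 over two sets of labeled items."""
    gold, predicted = set(gold), set(predicted)
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def evaluate(examples):
    """Micro-averaged frame-level scores over (sentence index, frame name) pairs."""
    gold = {(i, f) for i, ex in enumerate(examples) for f in ex["gold_frames"]}
    pred = {(i, f) for i, ex in enumerate(examples) for f in ex["predicted_frames"]}
    return precision_recall_f1(gold, pred)


# Invented labeled examples for illustration.
examples = [
    {
        "sentence": "The war caused mass displacement.",
        "gold_frames": ["Causation", "Hostile_encounter"],
        "predicted_frames": ["Causation"],
    },
    {
        "sentence": "She received an award.",
        "gold_frames": ["Receiving"],
        "predicted_frames": ["Receiving"],
    },
]

general_scores = evaluate(examples)
conflict_scores = evaluate(domain_subset(examples, ["war"]))
```

Matching on word boundaries keeps a seed like "war" from selecting sentences that merely contain "award", so the domain subset stays on topic.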

Findings & Insights

Frame-Semantic Parser Identifies Causal Frames Reliably

In this stage, we assess whether the methodology is truly applicable to the domain of conflicts and to news article data. We find that all of our 37 identified cause-effect related frames are represented by multiple instances in our dataset. In fact, as few as 1,600 randomly selected news articles (processed in 100 batches of 16 samples each) suffice to cover all cause-effect related frames. Therefore, for this intermediate step of the project, we gather support that the parser is in fact applicable to news article data.

Differences in Seed Phrase Selection

We make one major observation when comparing the finance-specific and conflict-specific seed selection for downstream use. Potentially because conflicts are strongly tied to geographic labels and information, a number of the top 50 seed phrases were geographic terms like “Afghanistan.” Since we did not want to bias the downstream evaluation of our domain-specific metrics, we excluded these seed phrases and continued the analysis with 34 seeds. In contrast, the top 50 finance-specific seed phrases obtained from the semantic analysis were neither geographic nor linked to individual (financial) historic events, so we continued the downstream analysis with all top 50 seed phrases. Already here we can observe differences across domains, giving more support to the idea of domain-specific evaluation and metrics.

Employing Domain-Specific Performance Metrics

Our research involved an extensive evaluation of the frame-semantic parser, based on a transformer architecture, across various configurations and domain-specific datasets. We began by rebuilding and training the model using the vanilla code and a smaller model size without hyperparameter tuning. Subsequently, we fine-tuned the hyperparameters to match the baseline performance levels. After this, we move to one of the main contributions of this project: the domain-specific evaluation. The evaluation was carried out on domain-specific validation and test datasets, curated using seed words from finance and conflict domains to highlight differences across domains.

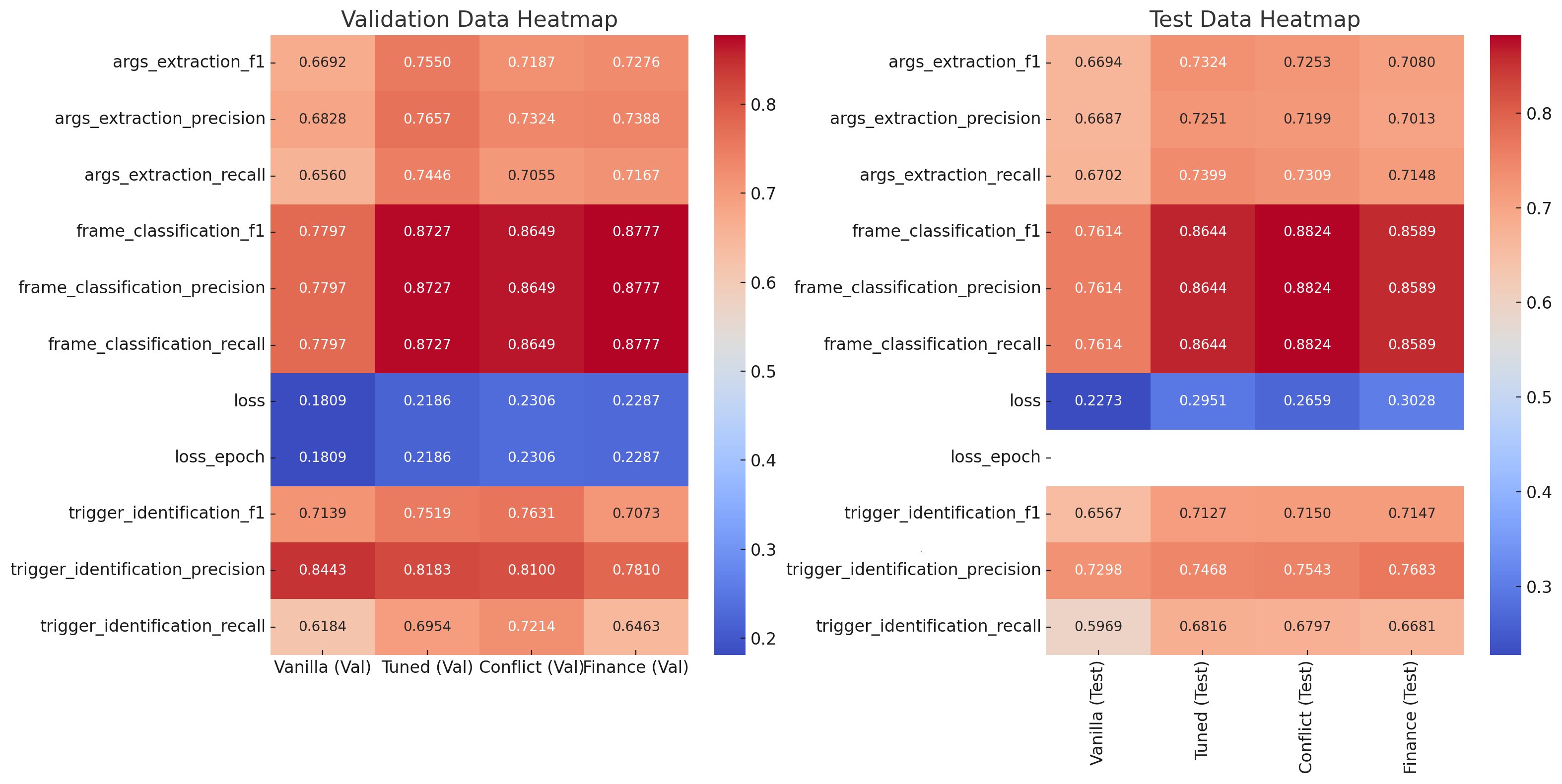

The untuned model (validation n = 646, test n = 1891) showed an argument extraction F1 score of 0.669 and a loss of 0.181 on the validation set. On the test set, it reached the same F1 score of 0.669 with a loss of 0.227.

Hyperparameter-Tuned Performance

Post hyperparameter tuning, there was a notable improvement in the model’s validation performance (n = 156), with the F1 score for frame classification reaching as high as 0.873, and the precision for trigger identification at 0.818. The test metrics (n = 195) also showed consistent enhancement, with the F1 score for frame classification at 0.864 and trigger identification precision at 0.747.

When evaluated on domain-specific datasets, the model exhibited varying degrees of effectiveness. This supports our assumption that domains matter for the applicability of LLMs to domain-specific tasks, and that our simple way of generating domain-specific metrics can give insights into that. For the conflict keywords (validation n = 121, test n = 255), the model achieved a validation F1 score of 0.865 for frame classification and a trigger identification precision of 0.764. For the finance domain (validation n = 121, test n = 255), the F1 score for frame classification was slightly higher at 0.878, and the trigger identification precision was 0.781, compared with 0.764 for the conflict domain.

The results indicate that the hyperparameter-tuned model significantly outperforms the vanilla model across all metrics. Additionally, the evaluation domain appears to have a measurable impact on the model’s performance, with the finance domain showing slightly better results in certain metrics compared to the conflict domain. These insights could be pivotal for further refinements and targeted applications of the frame-semantic parser in natural language processing tasks. Moreover, these observations fit our general understanding of the two domains. Reports on conflicts are likely to discuss the involved parties’ reasons for specific actions like attacks on certain targets. Additionally, the actions in conflicts are arguably more triggering events than “the good old stable economy.” Certainly, this research project can only be the beginning of a more rigorous assessment, but these findings show the promise of generating and evaluating simple, domain-specific performance metrics.

Performance Evaluation of Frame-Semantic Parser

Conclusion & Limitations

This project has embarked on an innovative journey, merging advanced natural language processing techniques with the intricate study of conflict. By harnessing the power of a transformer-based frame-semantic parser and integrating semantic similarity analysis, we have made significant strides in identifying causal relationships within news articles. This methodology has not only illuminated the dynamics of conflict as portrayed in media but also demonstrated the adaptability and potential of frame-semantic parsing in domain-specific applications.

Key Findings

- Utility of Frame-Semantic Parsing: Our work has showcased the frame-semantic parser as a valuable and explainable tool, particularly effective in data-scarce environments like conflict research. Its ability to contextualize information and discern nuanced semantic relationships makes it an indispensable asset in understanding complex thematic domains.

- Semantic Similarity for Domain-Specific Perspective: We illustrated the effectiveness of using semantic similarity to refine seed phrases, thereby tailoring the frame-semantic parser to the specific domain of conflict. This approach has proven to be a straightforward yet powerful means to customize advanced NLP models for targeted analysis.

- Dependence on Domain for Model Performance: Our findings highlight a significant insight: the performance of general-purpose language models can vary depending on the domain of application. This observation underscores the need for domain-specific tuning to achieve optimal results in specialized contexts.

- Development of Domain-Specific Performance Metrics: We proposed and validated a practical approach to developing domain-specific metrics, especially useful in resource-constrained environments. This methodology enables a nuanced evaluation of model performance tailored to specific thematic areas.

Limitations & Future Research

Despite the promising results, our project is not without its limitations, which pave the way for future research opportunities:

- Data Dependency: The effectiveness of our approach is heavily reliant on the quality and diversity of the news article dataset. Biases in media reporting or limitations in the scope of articles can skew the analysis and affect the accuracy of the results. In an extended version of the project - and with funding - one could switch to the Factiva dataset.

- Applicability of Domain-Specificity to Other Themes: While our method has shown efficacy in the context of conflict analysis, its applicability to other specific domains requires further exploration. Future research could test and refine our approach across various thematic areas to assess its broader utility.

- Model Complexity and Interpretability: While we have emphasized the explainability of the frame-semantic parser, the inherent complexity of transformer-based models can pose challenges in terms of scaling and deployment. Future work could focus on simplifying these models without compromising their performance - for instance via pruning and quantization.

- Expansion of Semantic Similarity Techniques: Our semantic similarity analysis was instrumental in refining seed phrases, but there is room for further enhancement. Incorporating more advanced semantic analysis techniques could yield even more precise and relevant seed phrases. While we found that alternative methods, such as BERT-based approaches, did not yield significant improvements, new models continue to appear and may be worth evaluating.

- Integration with Other Data Sources: Expanding the dataset beyond news articles to include social media, governmental reports, or academic literature could provide a more holistic view of conflict narratives and their causal relations.

In conclusion, our project represents a significant step forward in the intersection of natural language processing and conflict research. By addressing these limitations and building on our foundational work, future research can continue to push the boundaries of what is possible in this exciting and ever-evolving field.